
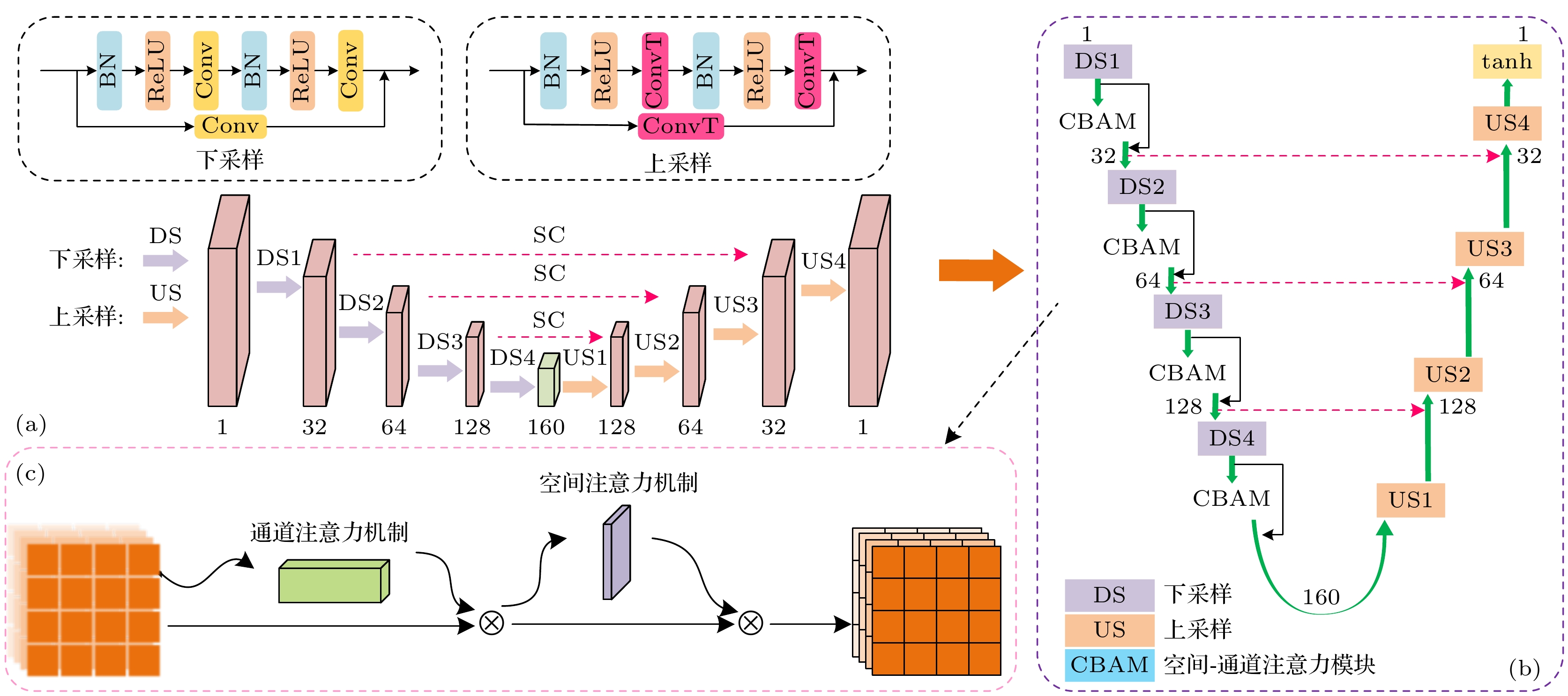

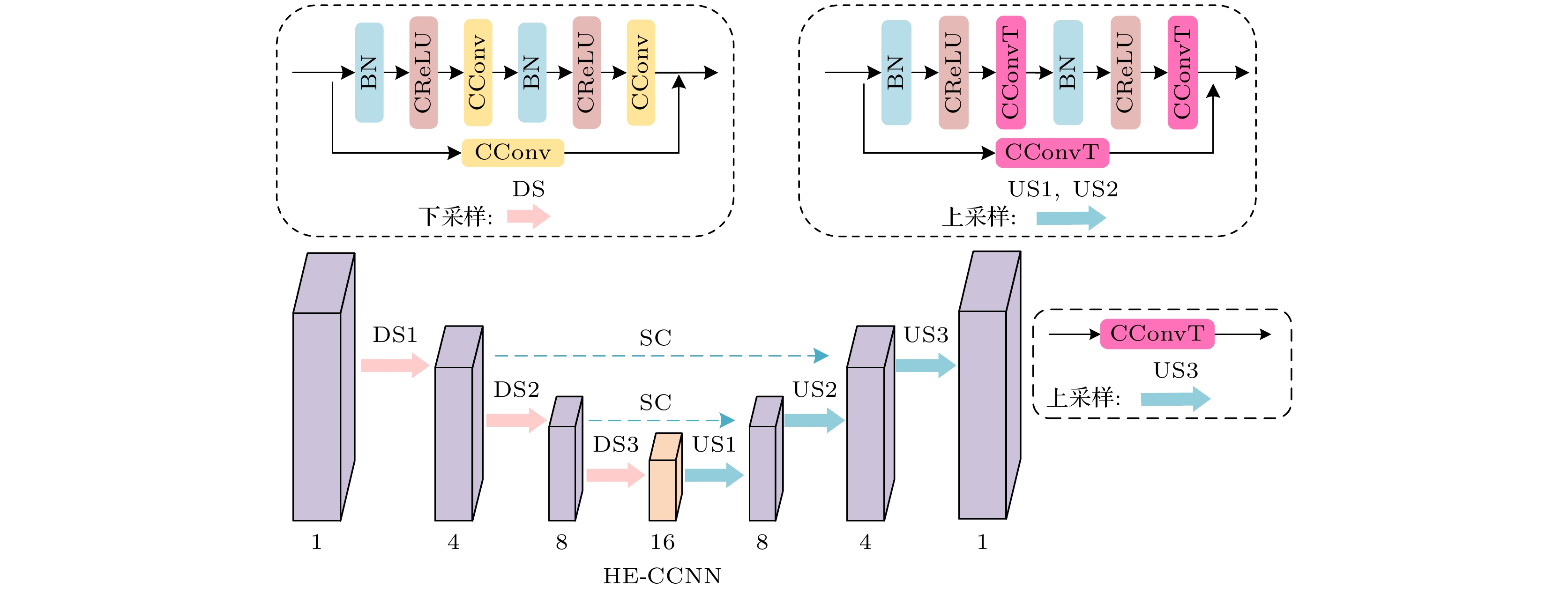

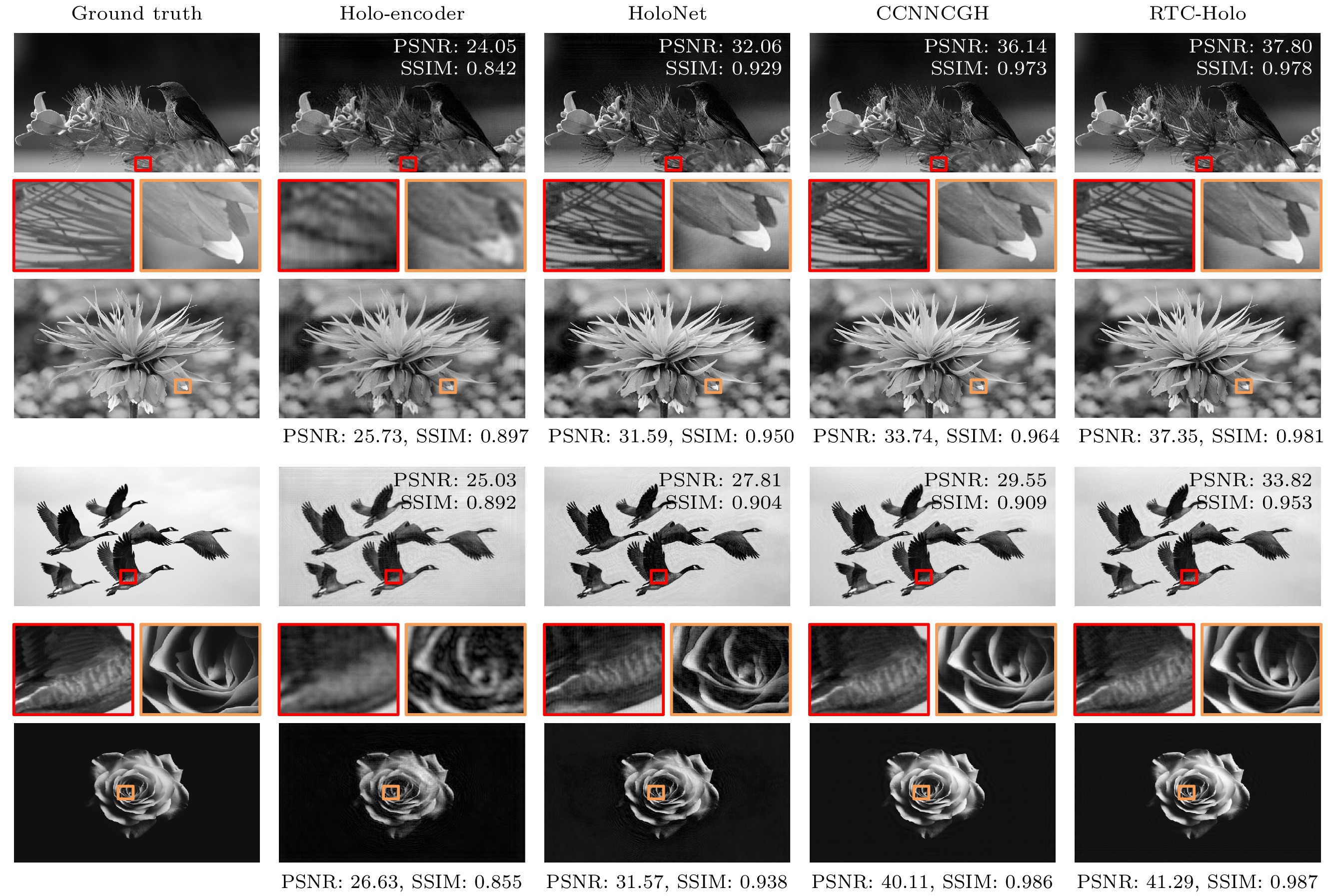

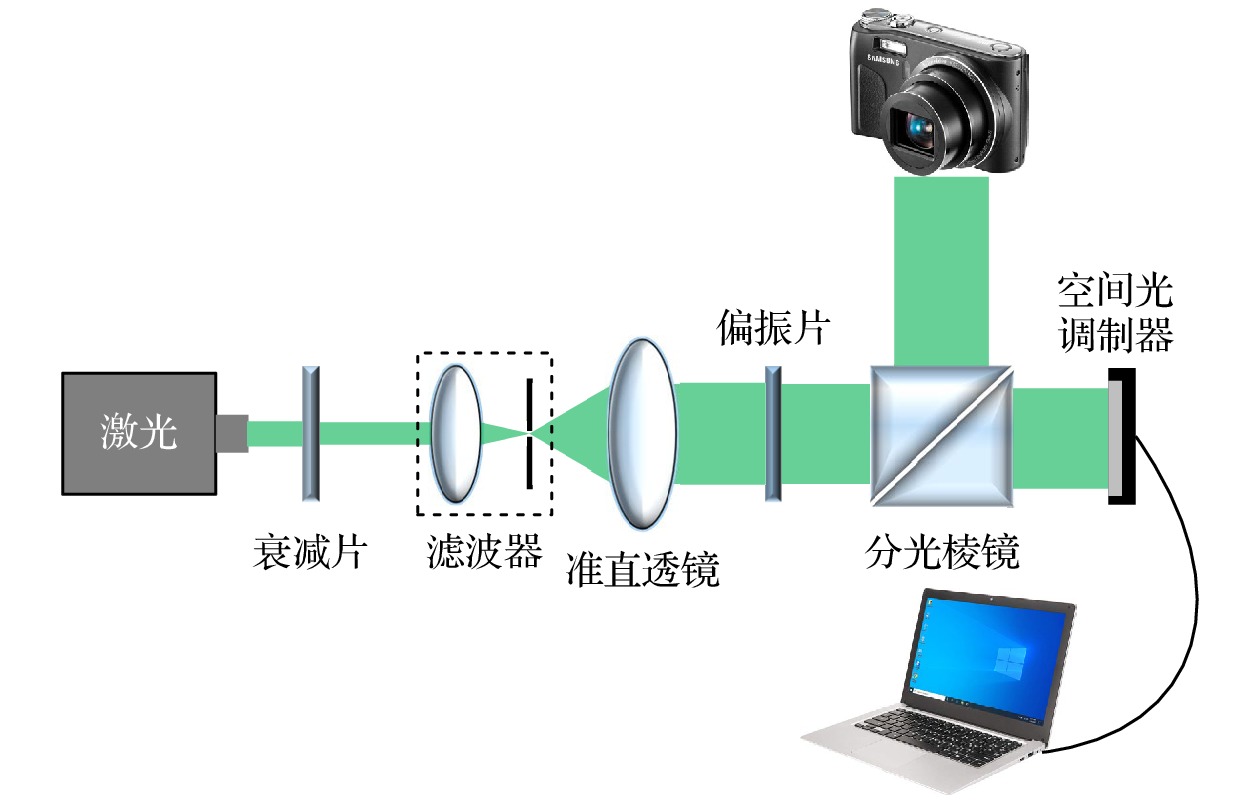

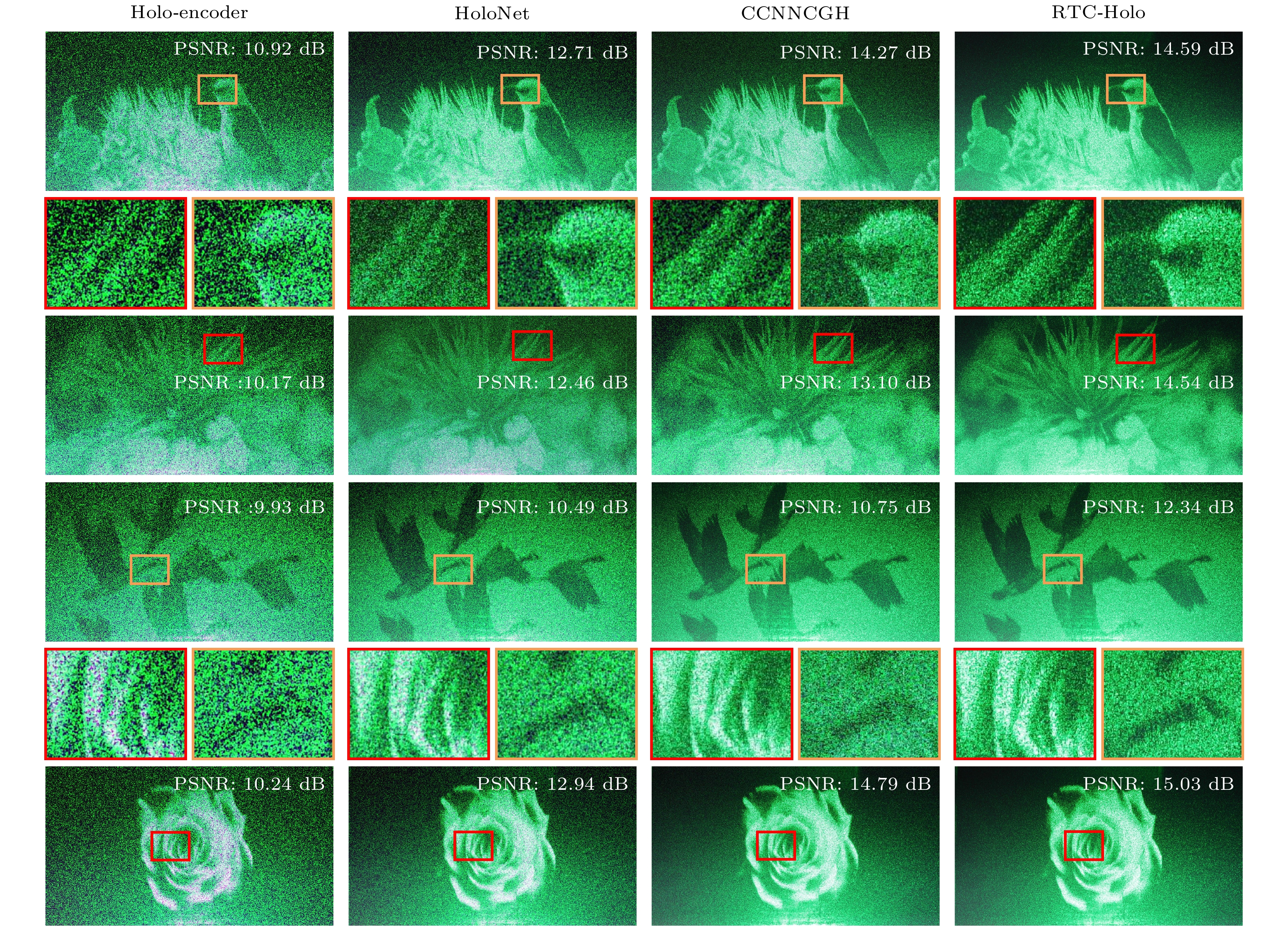

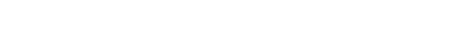

In recent years, with the significant improvement in computing performance, deep learning has developed explosively and has been widely applied in many fields. In this context, deep-learning-based computer-generated hologram (CGH) algorithms offer a new route to real-time, high-quality holographic display. The convolutional neural network (CNN) is the most typical network structure in deep learning: it automatically extracts key local features from an image and builds more complex global features through operations such as convolution, pooling, and full connection. Owing to their powerful feature extraction and generalization abilities, CNNs have been widely used in holographic display. Compared with traditional iterative algorithms, deep-learning-based CGH algorithms compute significantly faster, but their image quality still needs to be improved. In this paper, an attention convolutional neural network based on the angular spectrum diffraction model is proposed to improve both the quality and the speed of hologram generation. The network consists of a real-valued and a complex-valued convolutional neural network: the real-valued network performs phase prediction, while the complex-valued network predicts the complex amplitude at the spatial light modulator (SLM) plane; the phase of the predicted complex amplitude is then used for holographic encoding and numerical reconstruction. An attention mechanism is embedded in the downsampling stage of the phase prediction network to strengthen its feature extraction capability, thereby improving the quality of the generated phase-only holograms. An accurate angular spectrum diffraction model is embedded in the network so that large-scale labeled datasets are not needed, and the network is trained by unsupervised learning.

The proposed algorithm generates high-quality 2K (1920×1072 resolution) holograms within 0.015 s. The average peak signal-to-noise ratio (PSNR) of the reconstructed images reaches 32.12 dB, and the average structural similarity index measure (SSIM) for the generated holograms reaches 0.934. Numerical simulations and optical experiments verify the feasibility and effectiveness of the proposed attention convolutional neural network based on the angular spectrum diffraction model, which supports the application of deep learning theory and algorithms to real-time holographic display.
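The angular spectrum diffraction model mentioned in the abstract can be illustrated with a short NumPy sketch. This is a generic textbook form of the method, not the authors' implementation. The function name `angular_spectrum_propagate` and the wavelength, pixel pitch, propagation distance, and grid size used below are assumptions chosen purely for illustration; the abstract does not give these values.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pitch, distance):
    """Propagate a complex field over `distance` with the angular spectrum method.

    `field` is a 2D complex array sampled with pixel size `pitch` (metres).
    All names and parameters here are illustrative assumptions.
    """
    ny, nx = field.shape
    # Spatial frequencies (cycles per metre) along x and y.
    fx = np.fft.fftfreq(nx, d=pitch)
    fy = np.fft.fftfreq(ny, d=pitch)
    FX, FY = np.meshgrid(fx, fy)
    # Squared longitudinal frequency; non-positive values are evanescent.
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    propagating = arg > 0
    kz = 2.0 * np.pi * np.sqrt(np.where(propagating, arg, 0.0))
    # Transfer function: pure phase for propagating waves, zero otherwise.
    H = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * H)

# Hypothetical phase-only hologram on a small grid (values are assumptions).
rng = np.random.default_rng(0)
phase = rng.uniform(0.0, 2.0 * np.pi, size=(64, 64))
slm_field = np.exp(1j * phase)            # unit amplitude, phase-only
recon = angular_spectrum_propagate(slm_field, 532e-9, 8e-6, 0.1)
intensity = np.abs(recon) ** 2            # numerically reconstructed intensity
```

Because every step is an FFT or an element-wise operation, the same propagation can be written in an automatic-differentiation framework. That property is what allows a diffraction model of this kind to sit inside a network and supply an unsupervised loss between the reconstruction and the target image.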


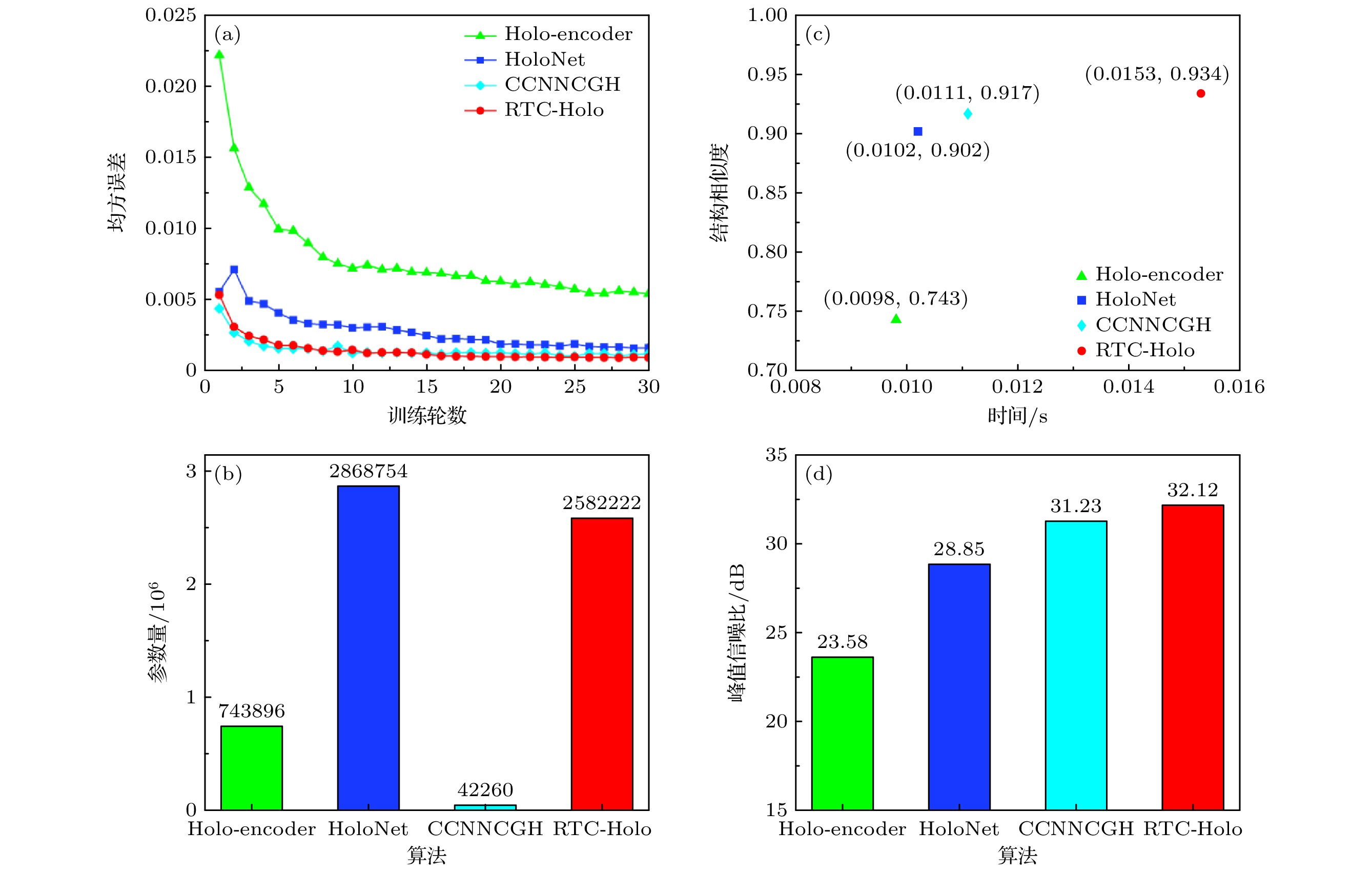

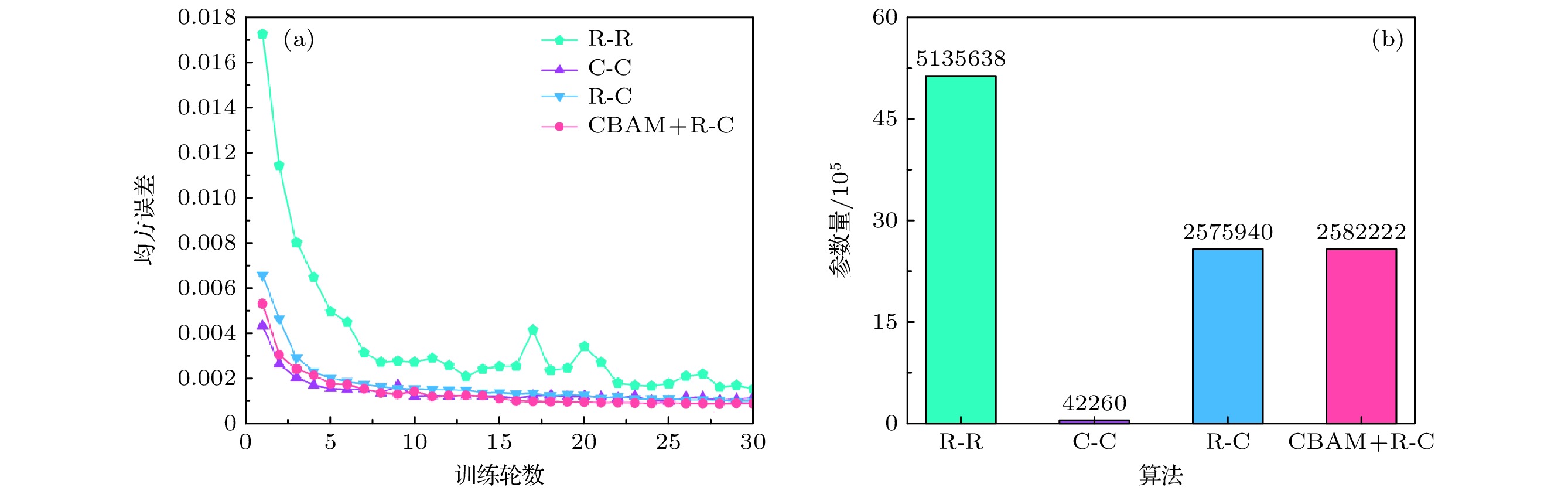

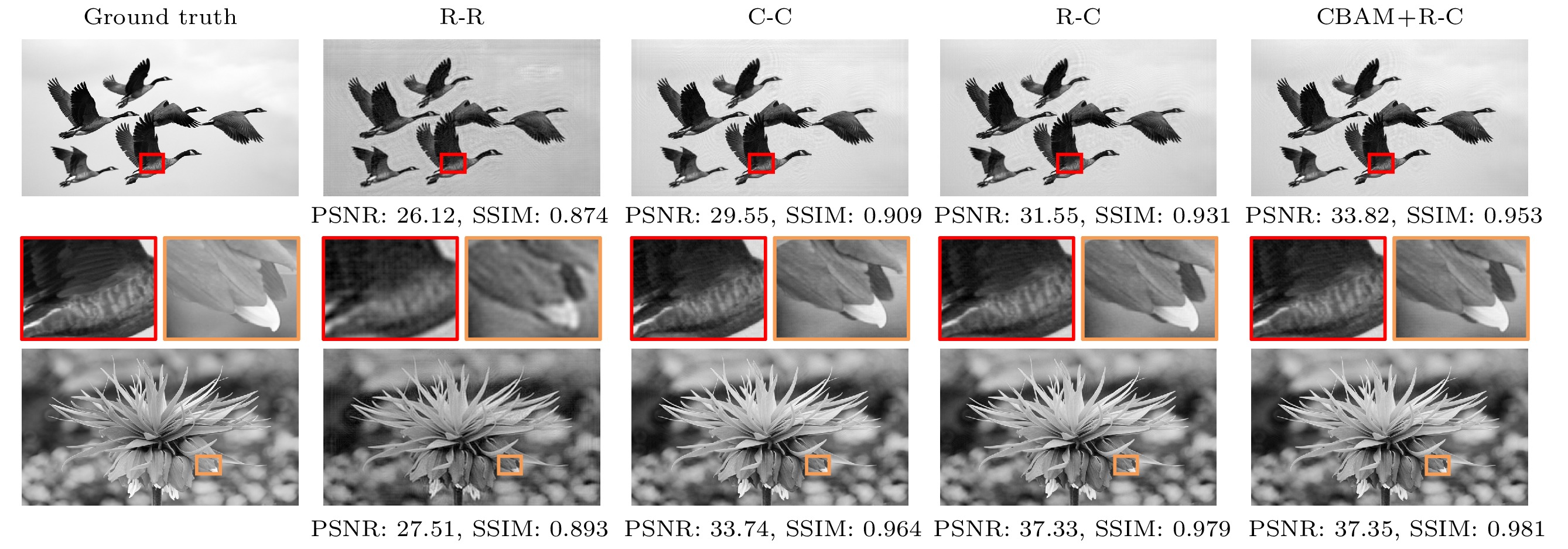

Figure 4. Comparison of the algorithms in numerical simulation: (a) line graph of the loss functions over 30 training epochs; (b) bar chart of parameter counts; (c) scatter plot of SSIM versus time; (d) bar chart of PSNR.

Table 1. Numerical simulation comparison of network structures.

| Network structure | R-R | C-C | R-C | CBAM+R-C |
|---|---|---|---|---|
| PSNR/dB | 27.89 | 31.23 | 31.59 | 32.12 |
| SSIM | 0.891 | 0.917 | 0.925 | 0.934 |
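For readers unfamiliar with the PSNR metric reported in Table 1, the following is a minimal sketch of the standard definition. It assumes images normalized to the range [0, 1] (so `peak=1.0`). The source does not state the normalization or the evaluation code used, so the function name `psnr` and the sample data are illustrative only.

```python
import numpy as np

def psnr(reference, reconstruction, peak=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    `peak` is the maximum possible pixel value (assumed 1.0 here).
    """
    reference = np.asarray(reference, dtype=np.float64)
    reconstruction = np.asarray(reconstruction, dtype=np.float64)
    mse = np.mean((reference - reconstruction) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak**2 / mse)

# Hypothetical example: a flat target and a reconstruction offset by 0.1.
target = np.zeros((4, 4))
estimate = np.full((4, 4), 0.1)
print(f"PSNR = {psnr(target, estimate):.2f} dB")
```

SSIM, the second metric in the table, compares local means, variances, and covariances instead of a single global error, and is usually computed with an existing library routine.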

