
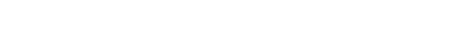

Deep-learning-based magnetic resonance imaging (MRI) reconstruction methods need large, high-quality patient datasets for pre-training. This is a challenge for clinical applications, because equipment limitations and patient-privacy concerns make it difficult to obtain enough patient MR data. In this paper, we propose a novel deep-learning-based method for undersampled MRI reconstruction that requires no pre-training and does not depend on a training dataset. The method is inspired by the deep image prior (DIP) framework. It integrates a structural prior and a support prior of the target MR image to improve learning efficiency. Because the reference image and the target image are similar, a high-resolution reference image acquired in advance is used as the network input, which incorporates structural prior information into the network. The indices of the large-magnitude wavelet coefficients of the reference image are taken as the known support of the target image and are used to construct a regularization term. Network training thereby becomes an optimization over the network parameters. Experimental results show that the proposed method obtains more accurate reconstructions from undersampled k-space data and has clear advantages in preserving tissue features and fine texture.


Keywords:

- magnetic resonance imaging
- undersampled image reconstruction
- deep image prior
- support prior
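The abstract states two things: the reference image is the network input, and the known wavelet support enters through a regularization term. It does not give the exact form of that term. The sketch below evaluates one plausible loss. It assumes an $\ell_1$ penalty on the wavelet coefficients that lie outside the support $T$, in the style of modified compressed sensing. This choice is not confirmed by the text:

$$\min_\theta \; \|M\odot\mathcal{F} f_\theta(x_{\rm ref}) - y\|_2^2 + \lambda \,\|(\Psi f_\theta(x_{\rm ref}))_{T^{c}}\|_1 .$$

Here $M$ is the sampling mask, $\mathcal{F}$ the orthonormal 2-D FFT, $\Psi$ a multi-level Haar transform and $f_\theta$ the network. The function names `haar2d` and `objective` are hypothetical. The code only scores a given image $x = f_\theta(x_{\rm ref})$. The network and its gradient-based training (for example in an autodiff framework) are not shown.

```python
import numpy as np


def haar2d(x, levels):
    """Multi-level orthonormal 2-D Haar transform; output has the same shape as x.

    Both image sides must be divisible by 2**levels.
    """
    c = np.array(x, dtype=float)
    h, w = c.shape
    s = np.sqrt(2.0)
    for _ in range(levels):
        if h % 2 or w % 2:
            raise ValueError("image size must be divisible by 2**levels")
        b = c[:h, :w]
        # Row step: averages in the left half, differences in the right half.
        b = np.hstack([(b[:, 0::2] + b[:, 1::2]) / s, (b[:, 0::2] - b[:, 1::2]) / s])
        # Column step: averages in the top half, differences in the bottom half.
        b = np.vstack([(b[0::2] + b[1::2]) / s, (b[0::2] - b[1::2]) / s])
        c[:h, :w] = b
        h, w = h // 2, w // 2  # recurse on the low-pass (LL) quadrant
    return c


def objective(x, y, mask, support, lam, levels):
    """Hypothetical loss: k-space data fidelity + l1 penalty on coefficients outside T.

    x       : real-valued image estimate (in DIP, the network output)
    y       : measured k-space, zero wherever mask is False
    mask    : boolean sampling mask in the unshifted FFT domain
    support : boolean array, True at wavelet indices assumed large (the set T)
    """
    k = np.fft.fft2(x, norm="ortho")
    fidelity = np.sum(np.abs(mask * k - y) ** 2)
    coeffs = haar2d(x, levels)
    penalty = np.sum(np.abs(coeffs[~support]))  # coefficients inside T are not penalized
    return fidelity + lam * penalty
```

The penalty is blind to the coefficients inside $T$, so the support prior acts only by pushing small, off-support coefficients toward zero. Table 1 lists much smaller values of $\lambda$ ($10^{-10}$ to $10^{-6}$). Their scale depends on the paper's data normalization and may not transfer to this sketch.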


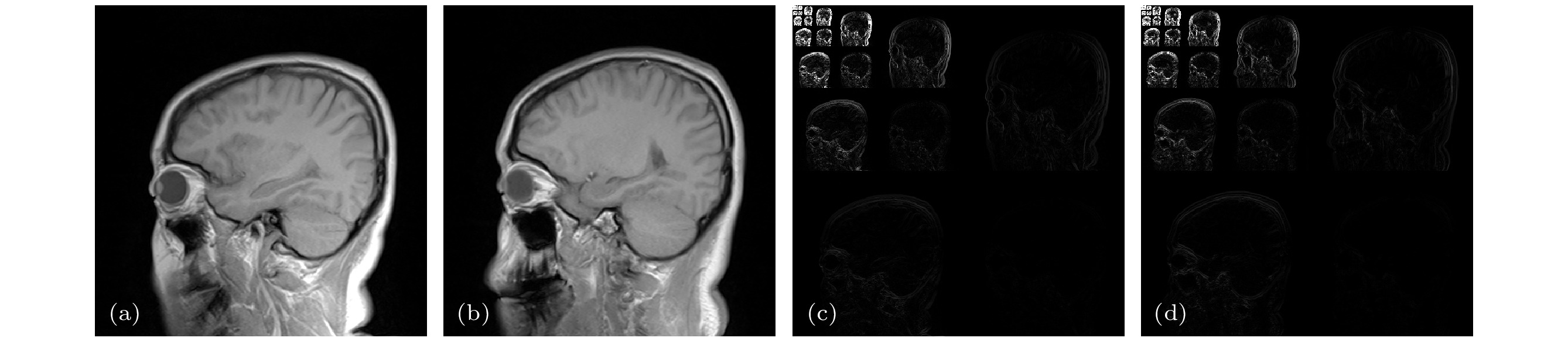

Figure 2. Structural similarity between the reference and target images and their support distributions in the wavelet domain: (a), (b) brain MR images of the same patient; (c), (d) the corresponding wavelet coefficient distributions (Haar wavelet, 9-level decomposition).
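Figure 2 shows that the large Haar coefficients of the reference and target images sit at similar locations. According to the abstract, the indices of the reference image's largest-magnitude coefficients are taken as the target's known support. Table 1 gives $P$ between 13500 and 55000 and 7 or 8 decomposition levels.

The sketch below computes such a support and measures how much of it two images share. It uses a hand-written orthonormal Haar transform so it has no dependency beyond NumPy. A library such as PyWavelets (`pywt.wavedec2`) could be used instead. The function names are hypothetical, and ties at the $P$-th magnitude are broken arbitrarily by the sort.

```python
import numpy as np


def haar2d(x, levels):
    """Multi-level orthonormal 2-D Haar transform (sides divisible by 2**levels)."""
    c = np.array(x, dtype=float)
    h, w = c.shape
    s = np.sqrt(2.0)
    for _ in range(levels):
        if h % 2 or w % 2:
            raise ValueError("image size must be divisible by 2**levels")
        b = c[:h, :w]
        b = np.hstack([(b[:, 0::2] + b[:, 1::2]) / s, (b[:, 0::2] - b[:, 1::2]) / s])
        b = np.vstack([(b[0::2] + b[1::2]) / s, (b[0::2] - b[1::2]) / s])
        c[:h, :w] = b
        h, w = h // 2, w // 2
    return c


def support_from_reference(ref, levels, P):
    """Boolean mask marking the P largest-magnitude Haar coefficients of ref."""
    coeffs = haar2d(ref, levels)
    order = np.argsort(np.abs(coeffs), axis=None)[::-1]  # flat indices, largest first
    support = np.zeros(coeffs.size, dtype=bool)
    support[order[:P]] = True
    return support.reshape(coeffs.shape)


def support_overlap(support_a, support_b):
    """Fraction of the indices in support_a that are also in support_b."""
    return np.logical_and(support_a, support_b).sum() / support_a.sum()
```

Comparing `support_from_reference(ref, L, P)` with the same call on the target image quantifies the similarity that panels (c) and (d) illustrate visually.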

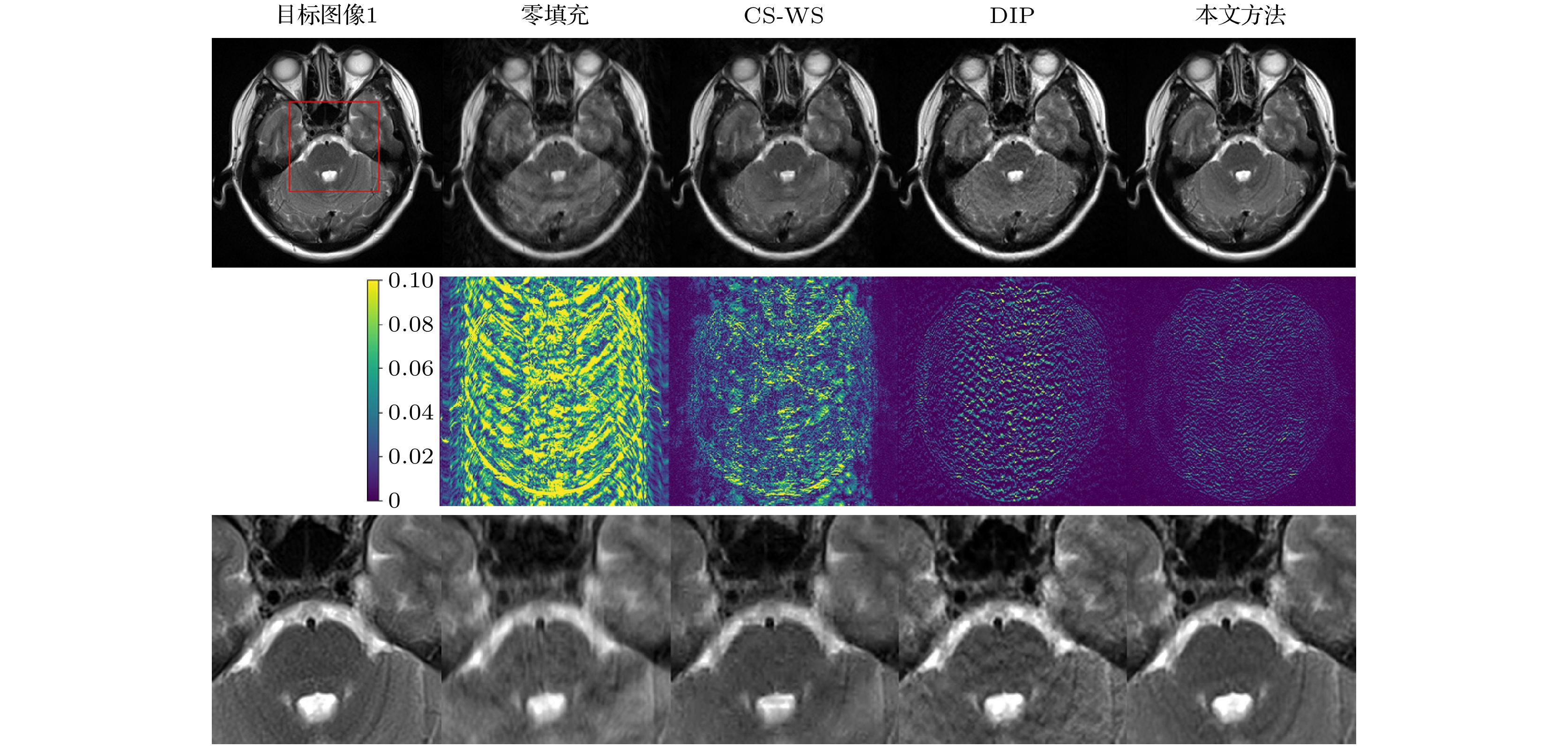

Figure 5. Comparison of reconstructions of target image 1 using a Cartesian undersampling mask at a 40% sampling rate: target image 1 and the reconstructions of each method (1st row), the corresponding error images (2nd row), and the corresponding zoomed-in regions (3rd row).

Figure 6. Comparison of reconstructions of target image 2 using a Cartesian undersampling mask at a 20% sampling rate: target image 2 and the reconstructions of each method (1st row), the corresponding error images (2nd row), and the corresponding zoomed-in regions (3rd row).
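Figures 5–7 use Cartesian undersampling at 20%–40%, and every table includes a zero-filling baseline. The mask pattern itself is not specified in this section. The sketch below assumes a common scheme: keep whole phase-encoding rows, namely a fully sampled central band (8% of the rows, an arbitrary choice) plus randomly chosen other rows, on centered k-space. The zero-filled image is the magnitude of the inverse FFT with the unsampled entries set to zero. The function names and the `center_fraction` and `seed` parameters are hypothetical.

```python
import numpy as np


def cartesian_mask(shape, rate, center_fraction=0.08, seed=0):
    """Row-wise Cartesian mask on centered k-space (DC at the array center)."""
    ny, nx = shape
    n_lines = int(round(rate * ny))
    n_center = min(int(round(center_fraction * ny)), n_lines)
    start = ny // 2 - n_center // 2
    center = np.arange(start, start + n_center)       # always-sampled low frequencies
    others = np.setdiff1d(np.arange(ny), center)
    rng = np.random.default_rng(seed)
    extra = rng.choice(others, size=n_lines - n_center, replace=False)
    mask = np.zeros(shape, dtype=bool)
    mask[np.concatenate([center, extra]), :] = True   # whole rows are kept
    return mask


def zero_filled(image, mask):
    """Undersample centered k-space and invert with the missing samples set to zero."""
    k = np.fft.fftshift(np.fft.fft2(image, norm="ortho"))
    return np.abs(np.fft.ifft2(np.fft.ifftshift(mask * k), norm="ortho"))
```

For example, `cartesian_mask((256, 256), 0.4)` keeps 102 of 256 rows, a 39.8% sampling rate after rounding.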

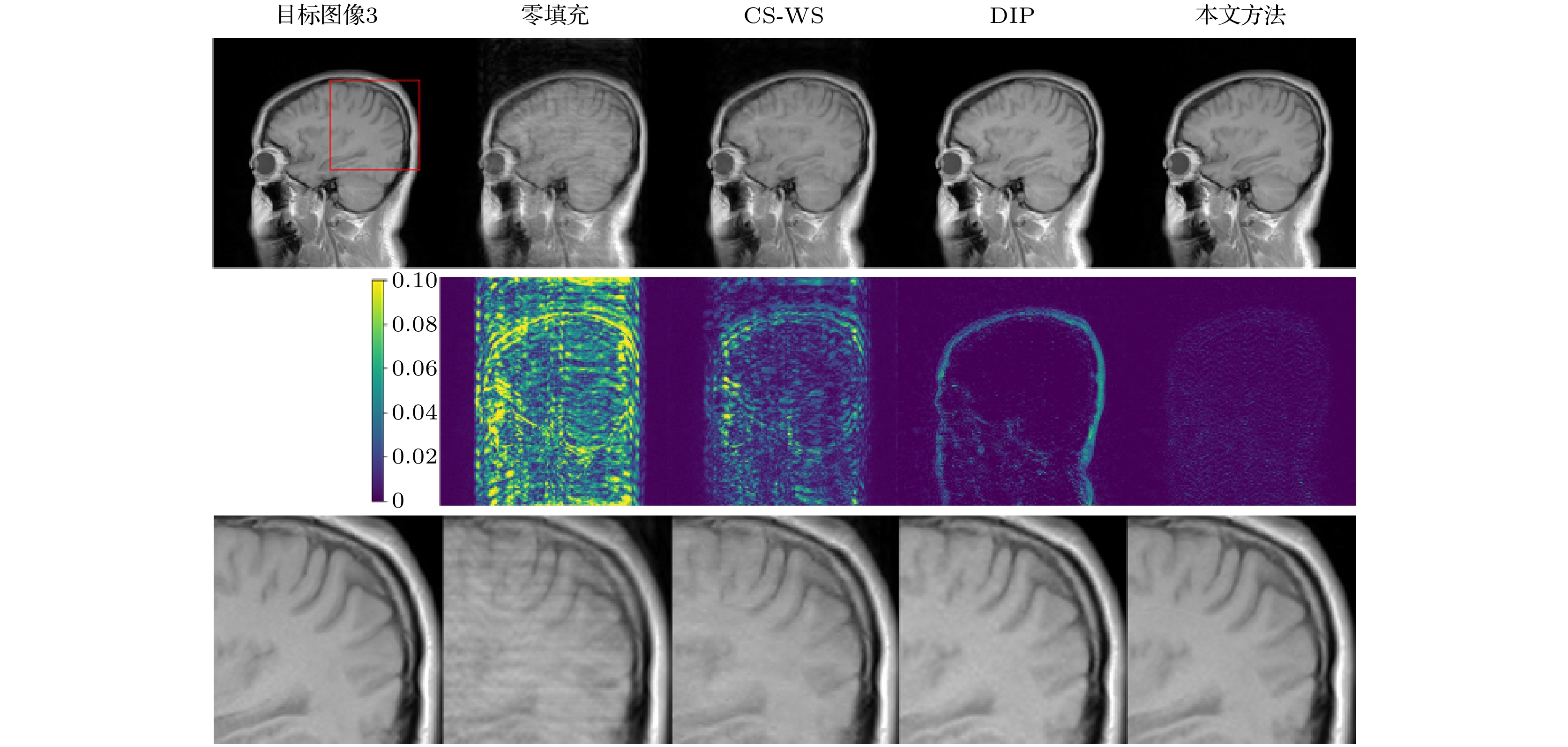

Figure 7. Comparison of reconstructions of target image 3 using a Cartesian undersampling mask at a 30% sampling rate: target image 3 and the reconstructions of each method (1st row), the corresponding error images (2nd row), and the corresponding zoomed-in regions (3rd row).

Figure 8. Comparison of reconstructions of target image 1 using a variable-density undersampling mask at a 30% sampling rate: target image 1 and the reconstructions of each method (1st row), the corresponding error images (2nd row), and the corresponding zoomed-in regions (3rd row).

Figure 9. Comparison of reconstructions of target image 3 using a radial undersampling mask at a 10% sampling rate: target image 3 and the reconstructions of each method (1st row), the corresponding error images (2nd row), and the corresponding zoomed-in regions (3rd row).
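Figures 8 and 9 use variable-density and radial masks, whose construction is not described in this section. The sketch below generates both on centered k-space under stated assumptions.

- **Variable-density mask:** exactly round(rate·N) points are drawn without replacement, with probability proportional to $(1-r)^{4}$, where $r$ is the normalized distance from the center. The exponent and the small probability floor are arbitrary choices.
- **Radial mask:** equally spaced straight spokes are drawn through the center, and the smallest spoke count whose coverage reaches the target rate is kept. Real acquisitions may instead use golden-angle spokes or a fully sampled center.

All function names are hypothetical.

```python
import numpy as np


def variable_density_mask(shape, rate, power=4.0, seed=0):
    """Random 2-D sampling whose density decays with distance from the k-space center."""
    ny, nx = shape
    ky, kx = np.meshgrid(np.linspace(-1, 1, ny), np.linspace(-1, 1, nx), indexing="ij")
    r = np.sqrt(ky**2 + kx**2) / np.sqrt(2.0)  # 0 at the center, 1 at the corners
    pdf = np.maximum(np.clip(1.0 - r, 0.0, 1.0) ** power, 1e-6)  # floor keeps corners selectable
    pdf = pdf.ravel() / pdf.sum()
    n = int(round(rate * ny * nx))
    idx = np.random.default_rng(seed).choice(ny * nx, size=n, replace=False, p=pdf)
    mask = np.zeros(ny * nx, dtype=bool)
    mask[idx] = True
    return mask.reshape(shape)


def radial_spokes(shape, n_spokes):
    """Mask with n_spokes equally spaced straight lines through the k-space center."""
    ny, nx = shape
    cy, cx = ny // 2, nx // 2
    half = np.hypot(ny, nx) / 2
    t = np.arange(-half, half, 0.5)  # half-pixel steps along each spoke
    mask = np.zeros(shape, dtype=bool)
    for theta in np.linspace(0.0, np.pi, n_spokes, endpoint=False):
        iy = np.round(cy + t * np.sin(theta)).astype(int)
        ix = np.round(cx + t * np.cos(theta)).astype(int)
        inside = (iy >= 0) & (iy < ny) & (ix >= 0) & (ix < nx)
        mask[iy[inside], ix[inside]] = True
    return mask


def radial_mask(shape, rate, max_spokes=2000):
    """Return (mask, n) for the fewest spokes whose coverage reaches the target rate."""
    for n in range(1, max_spokes + 1):
        mask = radial_spokes(shape, n)
        if mask.mean() >= rate:
            return mask, n
    raise ValueError("target sampling rate not reached")
```

Both masks concentrate samples near the center of k-space, where most image energy lies. This is why their zero-filled images in Table 3 tend to be less corrupted than Cartesian ones at comparable rates.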

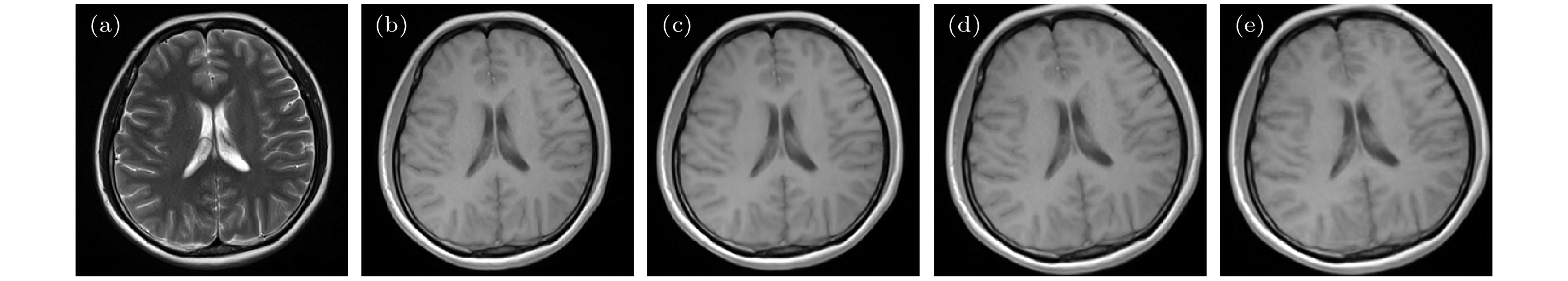

Figure 11. Reconstructions by the proposed method when there are contrast differences and motion offset between the reference image and the target image: (a) reference image; (b) target image to be reconstructed; (c) reconstruction of (b) by the proposed method; (d) target image to be reconstructed (with motion offset); (e) reconstruction of (d) by the proposed method. For (a)–(c), the relative error is 2.19%, PSNR = 40.8788 dB and SSIM = 0.9937; for (d), (e), the relative error is 2.54%, PSNR = 39.0825 dB and SSIM = 0.9937.
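Figure 11 and Tables 2–4 report relative error, PSNR and SSIM. Their exact definitions are not restated in this section, so the sketch below uses common conventions as assumptions:

- **Relative error:** the ratio of $\ell_2$ norms, in percent.
- **PSNR:** the peak is taken as the maximum magnitude of the ground-truth image.
- **SSIM:** a single-window (global) version that simplifies the locally windowed SSIM of Wang et al. (2004).

Library routines such as `skimage.metrics.structural_similarity` compute the windowed version and will give different values.

```python
import numpy as np


def relative_error(x_hat, x):
    """Relative l2 error in percent: 100 * ||x_hat - x|| / ||x||."""
    return 100.0 * np.linalg.norm(x_hat - x) / np.linalg.norm(x)


def psnr(x_hat, x, peak=None):
    """PSNR in dB; the peak defaults to max(|x|), an assumed convention."""
    peak = np.abs(x).max() if peak is None else peak
    mse = np.mean(np.abs(x_hat - x) ** 2)
    return 20.0 * np.log10(peak / np.sqrt(mse))


def global_ssim(x_hat, x, data_range=None):
    """SSIM computed over the whole image as one window (not the windowed mean SSIM)."""
    L = (x.max() - x.min()) if data_range is None else data_range
    c1, c2 = (0.01 * L) ** 2, (0.03 * L) ** 2
    mu_a, mu_b = x_hat.mean(), x.mean()
    var_a, var_b = x_hat.var(), x.var()
    cov = np.mean((x_hat - mu_a) * (x - mu_b))
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a**2 + mu_b**2 + c1) * (var_a + var_b + c2)
    )
```

As a check on the formulas: for a 2 × 2 identity image with every pixel perturbed by 0.1, the RMSE is 0.1 and the peak is 1. The PSNR is therefore $20\log_{10}(1/0.1) = 20$ dB.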

Table 1. Parameter settings for the experiments

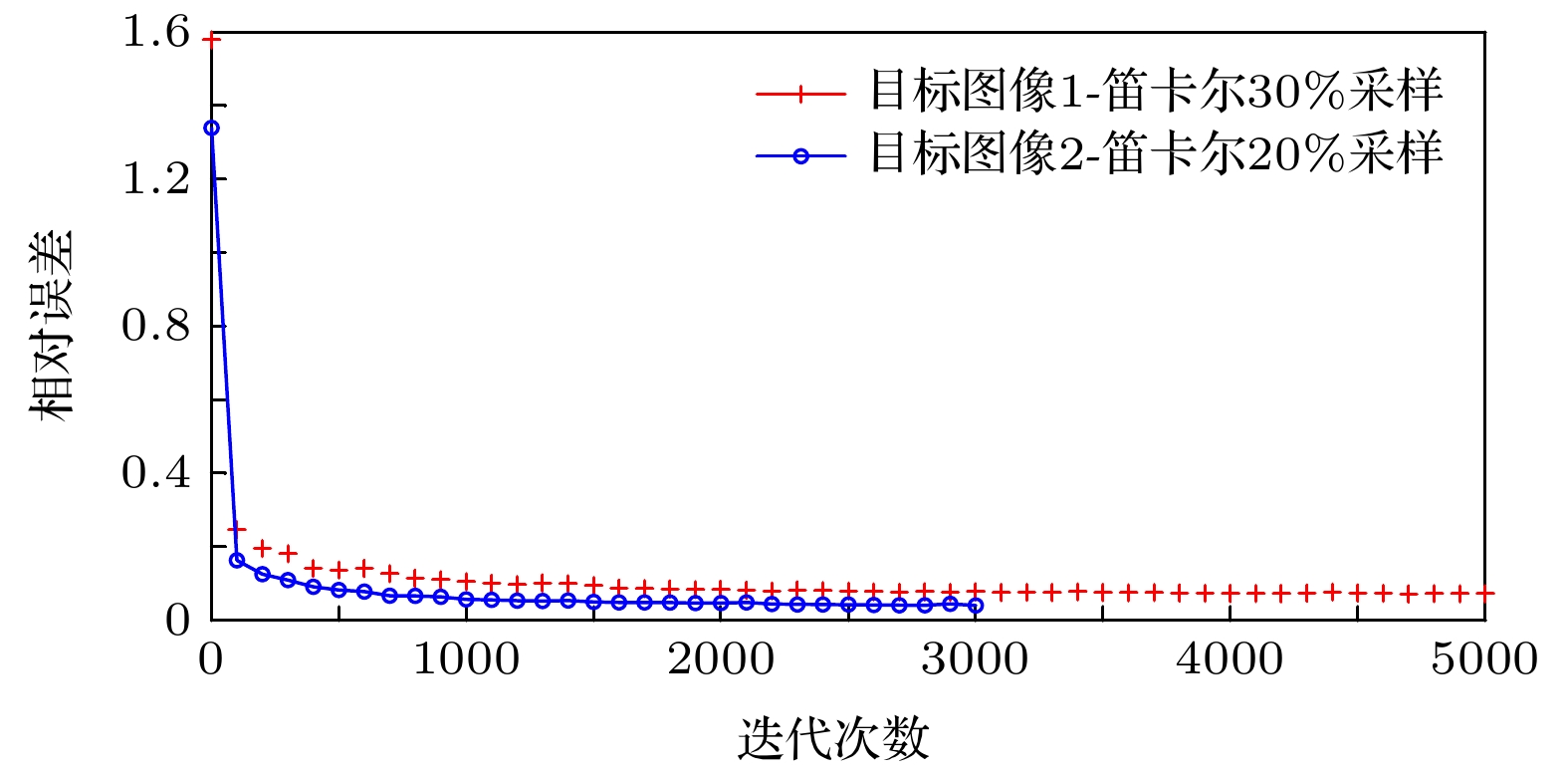

| Parameter group | Parameter | Target image 1 | Target image 2 | Target image 3 |
|---|---|---|---|---|
| Network hyperparameters | Learning rate | 0.005 | 0.003 | 0.003 |
| | $L$ | 6 | 6 | 6 |
| | $n_{\rm{d}}$ | [32, 64, 64, 64, 128, 128] | [16, 32, 64, 64, 128, 128] | [16, 32, 64, 64, 128, 128] |
| | $n_{\rm{u}}$ | [32, 64, 64, 64, 128, 128] | [16, 32, 64, 64, 128, 128] | [16, 32, 64, 64, 128, 128] |
| | $n_{\rm{s}}$ | [16, 16, 16, 16, 16, 16] | [16, 16, 16, 16, 16, 16] | [16, 16, 16, 16, 16, 16] |
| | $k_{\rm{d}}$ | [3, 3, 3, 3, 3, 3] | [3, 3, 3, 3, 3, 3] | [3, 3, 3, 3, 3, 3] |
| | $k_{\rm{u}}$ | [3, 3, 3, 3, 3, 3] | [3, 3, 3, 3, 3, 3] | [3, 3, 3, 3, 3, 3] |
| | $k_{\rm{s}}$ | [1, 1, 1, 1, 1, 1] | [1, 1, 1, 1, 1, 1] | [1, 1, 1, 1, 1, 1] |
| | Number of iterations | 5000 | 3000 | 3000 |
| Wavelet parameters | Wavelet function | Haar | Haar | Haar |
| | Decomposition levels | 7 | 8 | 8 |
| | $P$ | 13500 | 55000 | 52000 |
| | $\lambda$ | $1\times10^{-10}$ | $1\times10^{-6}$ | $1\times10^{-9}$ |

Table 2. Relative errors, PSNR and SSIM values of reconstructions by different methods under the Cartesian undersampling mask

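| MR image to be reconstructed | Method | Rel. error/% (10%) | PSNR/dB (10%) | SSIM (10%) | Rel. error/% (20%) | PSNR/dB (20%) | SSIM (20%) |
|---|---|---|---|---|---|---|---|
| Target image 1 | Zero-filling | 35.64 | 18.7365 | 0.5543 | 16.14 | 25.6185 | 0.7135 |
| | CS-WS | 34.53 | 19.0101 | 0.5590 | 13.74 | 27.0139 | 0.7998 |
| | DIP | 32.51 | 19.5344 | 0.6666 | 12.49 | 27.8440 | 0.8989 |
| | Proposed method | 19.89 | 23.8004 | 0.8172 | 9.43 | 30.2852 | 0.9793 |
| Target image 2 | Zero-filling | 19.66 | 22.5625 | 0.7550 | 13.36 | 25.9204 | 0.8065 |
| | CS-WS | 17.14 | 23.7530 | 0.7654 | 10.34 | 28.1426 | 0.8507 |
| | DIP | 15.09 | 24.9087 | 0.8473 | 5.41 | 33.7814 | 0.9619 |
| | Proposed method | 7.51 | 30.9288 | 0.9454 | 3.48 | 37.5915 | 0.9788 |
| Target image 3 | Zero-filling | 17.64 | 23.5716 | 0.7857 | 12.76 | 26.3807 | 0.8266 |
| | CS-WS | 15.24 | 24.8395 | 0.8139 | 9.87 | 28.6144 | 0.8774 |
| | DIP | 14.90 | 25.0660 | 0.8585 | 5.43 | 33.8264 | 0.9607 |
| | Proposed method | 7.31 | 31.2228 | 0.9491 | 3.53 | 37.5488 | 0.9806 |

| MR image to be reconstructed | Method | Rel. error/% (30%) | PSNR/dB (30%) | SSIM (30%) | Rel. error/% (40%) | PSNR/dB (40%) | SSIM (40%) |
|---|---|---|---|---|---|---|---|
| Target image 1 | Zero-filling | 11.53 | 28.5361 | 0.7657 | 7.71 | 32.0337 | 0.8087 |
| | CS-WS | 9.40 | 30.3126 | 0.8689 | 6.68 | 33.2736 | 0.9136 |
| | DIP | 9.05 | 30.6386 | 0.9342 | 6.98 | 32.8933 | 0.9605 |
| | Proposed method | 7.06 | 32.7970 | 0.9590 | 5.64 | 34.7395 | 0.9728 |
| Target image 2 | Zero-filling | 4.61 | 35.1677 | 0.8677 | 3.46 | 37.6630 | 0.8830 |
| | CS-WS | 2.61 | 40.0883 | 0.9377 | 1.95 | 42.6338 | 0.9438 |
| | DIP | 2.80 | 39.4992 | 0.9858 | 2.33 | 41.1008 | 0.9892 |
| | Proposed method | 2.08 | 42.0469 | 0.9905 | 1.73 | 43.6437 | 0.9931 |
| Target image 3 | Zero-filling | 4.31 | 35.8182 | 0.8813 | 3.24 | 38.2961 | 0.8997 |
| | CS-WS | 2.45 | 40.7193 | 0.9444 | 1.84 | 43.1958 | 0.9601 |
| | DIP | 3.22 | 38.5632 | 0.9824 | 2.54 | 40.4324 | 0.9885 |
| | Proposed method | 2.05 | 42.2484 | 0.9908 | 1.64 | 44.2051 | 0.9939 |

Table 3. Relative errors, PSNR and SSIM values of reconstructions by different methods under the radial and variable-density undersampling masks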

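| MR image to be reconstructed | Mask (sampling rate) | Method | Relative error/% | PSNR/dB | SSIM |
|---|---|---|---|---|---|
| Target image 1 | Radial (20%) | Zero-filling | 14.34 | 26.6426 | 0.7852 |
| | | CS-WS | 12.11 | 28.1129 | 0.8519 |
| | | DIP | 10.95 | 28.9845 | 0.9169 |
| | | Proposed method | 7.92 | 31.7960 | 0.9547 |
| | Variable density (30%) | Zero-filling | 15.03 | 26.2373 | 0.7735 |
| | | CS-WS | 11.15 | 28.8264 | 0.8662 |
| | | DIP | 9.26 | 30.4441 | 0.9321 |
| | | Proposed method | 6.43 | 33.6199 | 0.9648 |
| Target image 2 | Radial (10%) | Zero-filling | 8.05 | 30.3202 | 0.7960 |
| | | CS-WS | 5.73 | 33.2803 | 0.9010 |
| | | DIP | 4.40 | 35.5814 | 0.9662 |
| | | Proposed method | 3.54 | 37.4434 | 0.9760 |
| | Variable density (20%) | Zero-filling | 6.47 | 32.2213 | 0.8778 |
| | | CS-WS | 3.56 | 37.4195 | 0.9625 |
| | | DIP | 3.15 | 38.4764 | 0.9821 |
| | | Proposed method | 2.57 | 40.2243 | 0.9850 |
| Target image 3 | Radial (10%) | Zero-filling | 7.03 | 31.5576 | 0.8177 |
| | | CS-WS | 5.18 | 34.2111 | 0.9158 |
| | | DIP | 4.73 | 35.0303 | 0.9629 |
| | | Proposed method | 3.55 | 37.4852 | 0.9751 |
| | Variable density (20%) | Zero-filling | 5.82 | 33.2106 | 0.8978 |
| | | CS-WS | 3.27 | 38.2161 | 0.9675 |
| | | DIP | 3.01 | 38.9786 | 0.9819 |
| | | Proposed method | 2.54 | 40.4229 | 0.9852 |

Table 4. Relative errors, PSNR and SSIM values of reconstructions by different methods under the radial and variable-density undersampling masks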

| Mask (sampling rate) | Method | Relative error/% | PSNR/dB | SSIM |
|---|---|---|---|---|
| Radial (20%) | DIP + Ref | 8.88 | 30.9180 | 0.9478 |
| | DIP + Sup | 10.35 | 29.4739 | 0.9264 |
| | DIP + Ref + Sup | 8.24 | 31.5530 | 0.9512 |
| | DIP + Ref + Sup + Cor | 7.92 | 31.7960 | 0.9547 |
| Variable density (30%) | DIP + Ref | 7.38 | 32.4116 | 0.9583 |
| | DIP + Sup | 9.08 | 30.6104 | 0.9583 |
| | DIP + Ref + Sup | 6.71 | 33.2360 | 0.9620 |
| | DIP + Ref + Sup + Cor | 6.43 | 33.6199 | 0.9648 |

Table 5. Computation time of different reconstruction methods under the Cartesian mask

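| MR image to be reconstructed | Method | Time (10%) | Time (20%) | Time (30%) | Time (40%) |
|---|---|---|---|---|---|
| Target image 1 | CS-WS | 46 s | 46 s | 49 s | 46 s |
| | DIP | 2 min 53 s | 2 min 43 s | 2 min 47 s | 2 min 45 s |
| | Proposed method | 3 min 55 s | 3 min 56 s | 3 min 54 s | 3 min 56 s |
| Target image in Fig. 11(d) | CS-WS | 42 s | 42 s | 45 s | 44 s |
| | DIP | 2 min 33 s | 2 min 35 s | 2 min 35 s | 2 min 34 s |
| | Proposed method | 3 min 14 s | 3 min 15 s | 3 min 15 s | 3 min 13 s |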
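The extra cost of the two priors over plain DIP can be read off Table 5 with a little arithmetic. The script below transcribes the table, converts each entry to seconds and compares the mean time per method. The helper names are illustrative only.

```python
from statistics import mean

# Computation times transcribed from Table 5 (Cartesian mask; 10%, 20%, 30%, 40%).
TIMES = {
    "Target image 1": {
        "CS-WS": ["46 s", "46 s", "49 s", "46 s"],
        "DIP": ["2 min 53 s", "2 min 43 s", "2 min 47 s", "2 min 45 s"],
        "Proposed": ["3 min 55 s", "3 min 56 s", "3 min 54 s", "3 min 56 s"],
    },
    "Fig. 11(d) target": {
        "CS-WS": ["42 s", "42 s", "45 s", "44 s"],
        "DIP": ["2 min 33 s", "2 min 35 s", "2 min 35 s", "2 min 34 s"],
        "Proposed": ["3 min 14 s", "3 min 15 s", "3 min 15 s", "3 min 13 s"],
    },
}


def to_seconds(text):
    """Convert strings such as '2 min 53 s' or '46 s' to seconds."""
    parts = text.split()
    scale = {"min": 60, "s": 1}
    return sum(int(v) * scale[u] for v, u in zip(parts[0::2], parts[1::2]))


def summarize(times):
    """Mean seconds per method and the proposed/DIP time ratio for each image."""
    out = {}
    for image, methods in times.items():
        means = {m: mean(to_seconds(t) for t in ts) for m, ts in methods.items()}
        out[image] = (means, means["Proposed"] / means["DIP"])
    return out


for image, (means, ratio) in summarize(TIMES).items():
    print(image, means, f"Proposed/DIP = {ratio:.2f}")
```

For target image 1, the means are 167 s for DIP and 235.25 s for the proposed method, a ratio of about 1.41. For the Fig. 11(d) target, they are 154.25 s and 194.25 s, a ratio of about 1.26. In both cases the proposed method takes roughly 4.5 to 5 times as long as CS-WS (46.75 s and 43.25 s).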
