
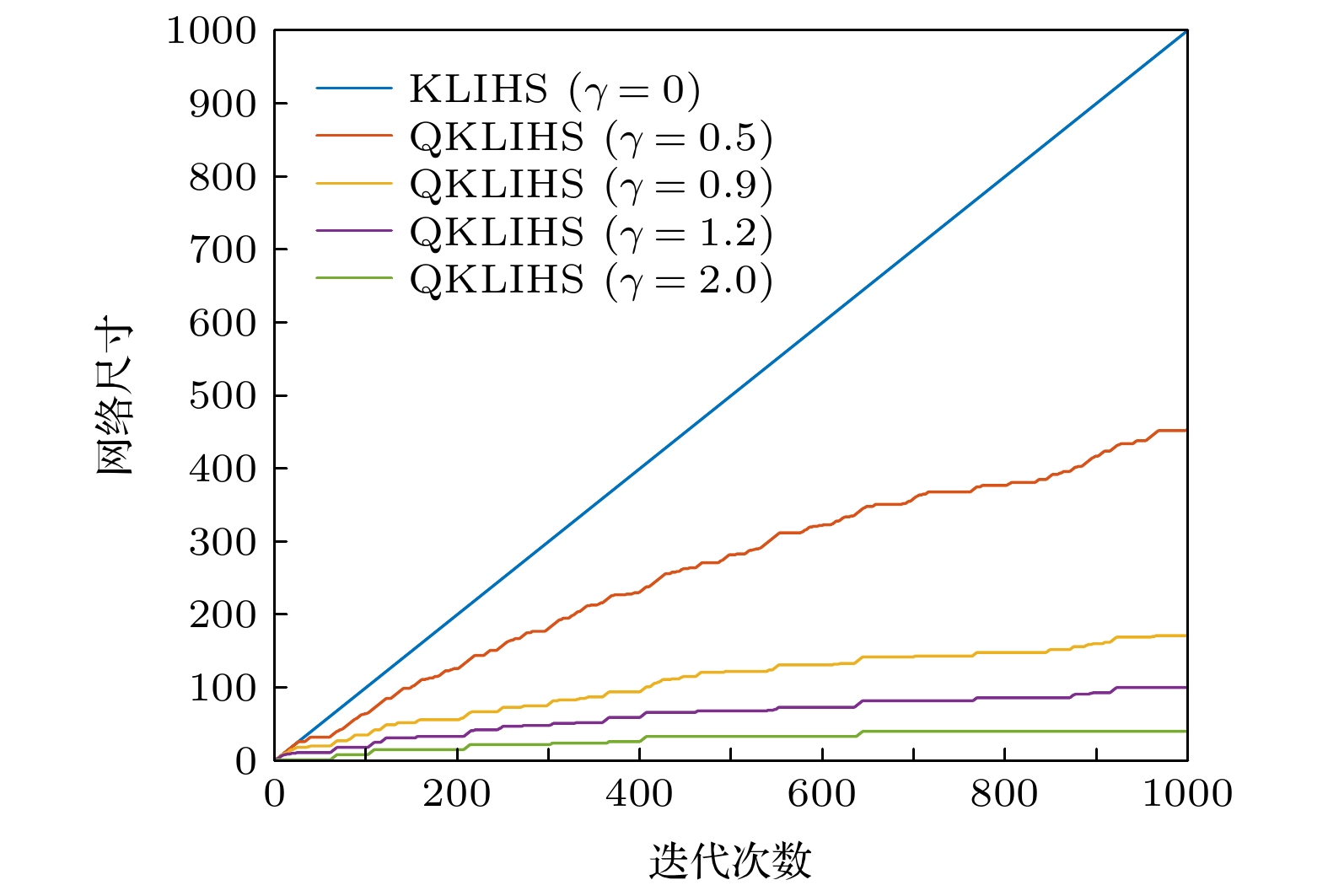

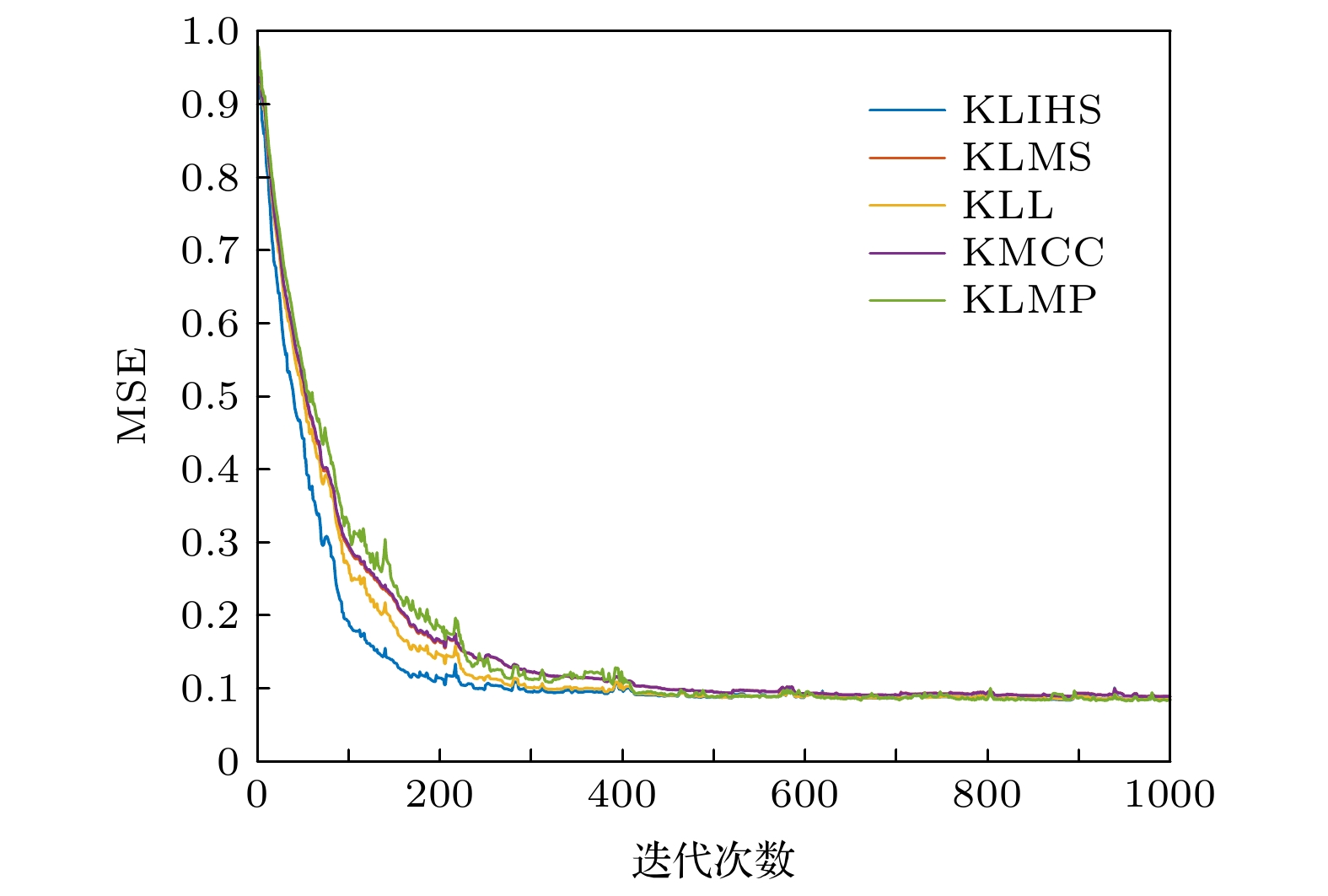

Over the last few decades, kernel methods have been used successfully in adaptive filtering to solve nonlinear problems. A kernel adaptive filter (KAF) uses a Mercer kernel to map data from the input space into a reproducing kernel Hilbert space (RKHS), where inner products can be computed easily with the so-called kernel trick. Kernel adaptive filtering algorithms outperform conventional adaptive filtering algorithms on nonlinear problems such as nonlinear channel equalization. For nonlinear problems, a robust kernel least inverse hyperbolic sine (KLIHS) algorithm is proposed by combining the kernel method with the inverse hyperbolic sine function. The main disadvantage of KAFs is that the radial-basis function (RBF) network grows with every new data sample, which increases the computational complexity and the memory requirement. Vector quantization (VQ) has been proposed to address this problem and has been applied successfully to existing kernel adaptive filtering algorithms. The main idea of VQ is to compress the input space through quantization so as to curb the growth of the network size. In this paper, VQ is used to quantize the input-space data, and a quantized kernel least inverse hyperbolic sine (QKLIHS) algorithm is constructed to restrain the growth of the network. The energy conservation relation and the convergence condition of the QKLIHS algorithm are given. Simulation results for Mackey-Glass short-time chaotic time series prediction and for nonlinear channel equalization show that the proposed KLIHS and QKLIHS algorithms have advantages in convergence speed, robustness, and computational complexity.
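The coefficient updates in Tables 1 and 2 weight each error as $2\mu\, e/\sqrt{1+e^4}$. Since $\frac{\mathrm{d}}{\mathrm{d}e}\,\mathrm{asinh}(e^2) = 2e/\sqrt{1+e^4}$, this is consistent with a stochastic-gradient step of size $\mu$ on the per-sample cost $\mathrm{asinh}(e^2)$; that cost is an assumption here, since this section does not state it. The minimal Python sketch below (function names are hypothetical) checks the derivative numerically and illustrates the robustness claim: the weight peaks at $1/\sqrt{2}$ when $|e| = 1$ and decays roughly like $1/e$ for large errors, whereas the LMS weight $e$ grows without bound.

```python
import math


def lihs_cost(e: float) -> float:
    """Assumed per-sample KLIHS cost: asinh(e^2)."""
    return math.asinh(e * e)


def lihs_weight(e: float) -> float:
    """Error weighting e / sqrt(1 + e^4); the KLIHS update adds 2*mu times this."""
    return e / math.sqrt(1.0 + e ** 4)


def numerical_derivative(f, e: float, step: float = 1e-6) -> float:
    """Central-difference derivative, used only to check d/de asinh(e^2) = 2*lihs_weight(e)."""
    return (f(e + step) - f(e - step)) / (2.0 * step)


if __name__ == "__main__":
    for e in [0.1, 0.5, 1.0, 2.0, 10.0, 100.0]:
        # LMS scales the update by e itself; the LIHS weight is capped and shrinks for outliers.
        print(f"e={e:6.1f}  LMS weight={e:7.1f}  LIHS weight={lihs_weight(e):.4f}"
              f"  gradient check={numerical_derivative(lihs_cost, e) - 2 * lihs_weight(e):+.1e}")
```

Bounding the weight rather than the step size is what lets a single large (e.g. impulsive) error move the coefficients only a limited amount.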


Keywords:

- kernel method

- vector quantization

- kernel least inverse hyperbolic sine algorithm

- short-time chaotic time series prediction



Table 1. KLIHS algorithm.

Initialization: choose the step size $\mu$ and the kernel width $h$; set $\boldsymbol{a}(1) = 2\mu \dfrac{d(1)}{\sqrt{1 + d^4(1)}}$ and $\boldsymbol{C}(1) = \{\boldsymbol{x}(1)\}$.

For each new sample pair $\{\boldsymbol{x}(n), d(n)\}$:

1) Compute the output: $y(n) = \displaystyle\sum\nolimits_{j=1}^{n-1} \boldsymbol{a}_j(n-1)\,\kappa(\boldsymbol{x}(j), \boldsymbol{x}(n))$

2) Compute the error: $e(n) = d(n) - y(n)$

3) Store the new center: $\boldsymbol{C}(n) = \{\boldsymbol{C}(n-1), \boldsymbol{x}(n)\}$

4) Update the coefficients: $\boldsymbol{a}(n) = \Big\{\boldsymbol{a}(n-1),\ 2\mu \dfrac{e(n)}{\sqrt{1 + e^4(n)}}\Big\}$

End loop.
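The steps of Table 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' code: the Gaussian form $\kappa(\boldsymbol{x}, \boldsymbol{x}') = \exp(-\|\boldsymbol{x}-\boldsymbol{x}'\|^2 / (2h^2))$ of the kernel with width $h$ is an assumption (the table only names the kernel width), and the class name `KLIHS`, its methods, the default parameters, and the $\sin(\pi x)$ demo target are hypothetical. Because the dictionary starts empty, the first prediction is $0$, so $e(1) = d(1)$ and the first stored coefficient equals the initialization $\boldsymbol{a}(1)$ of Table 1.

```python
import numpy as np


def gaussian_kernel(x, centers, h):
    """Assumed Gaussian kernel exp(-||x - c||^2 / (2 h^2)) between x and every stored center c."""
    diff = centers - x
    return np.exp(-np.sum(diff * diff, axis=1) / (2.0 * h * h))


class KLIHS:
    """Sketch of Table 1: every sample becomes a new center with an LIHS-weighted coefficient."""

    def __init__(self, mu=0.25, h=0.3):
        self.mu = mu          # step size
        self.h = h            # kernel width
        self.centers = []     # dictionary C(n)
        self.coeffs = []      # coefficient vector a(n)

    def predict(self, x):
        """Step 1: y(n) = sum_j a_j(n-1) * kappa(x(j), x(n))."""
        if not self.centers:
            return 0.0
        x = np.atleast_1d(np.asarray(x, dtype=float))
        k = gaussian_kernel(x, np.asarray(self.centers), self.h)
        return float(np.dot(self.coeffs, k))

    def update(self, x, d):
        """Steps 2-4 for one sample pair {x(n), d(n)}; returns the a priori error e(n)."""
        x = np.atleast_1d(np.asarray(x, dtype=float))
        e = d - self.predict(x)                                      # step 2
        self.centers.append(x)                                       # step 3
        self.coeffs.append(2.0 * self.mu * e / np.sqrt(1.0 + e**4))  # step 4
        return e


if __name__ == "__main__":
    # Hypothetical usage: learn d = sin(pi * x) online from uniformly drawn scalar inputs.
    rng = np.random.default_rng(0)
    model = KLIHS(mu=0.25, h=0.3)
    errors = np.array([model.update([x], np.sin(np.pi * x)) for x in rng.uniform(-1, 1, 500)])
    print("MSE first 50:", np.mean(errors[:50] ** 2), " MSE last 100:", np.mean(errors[-100:] ** 2))
    print("network size:", len(model.centers))  # grows by one per sample
```

The demo makes the drawback named in the abstract concrete: the network size equals the number of samples seen, so memory and the cost of each prediction grow linearly with time.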

Table 2. QKLIHS algorithm.

Initialization: choose the step size $\mu$, the kernel width $h$, and the quantization threshold $\gamma$; set $\boldsymbol{a}(1) = 2\mu \dfrac{d(1)}{\sqrt{1 + d^4(1)}}$ and $\boldsymbol{C}(1) = \{\boldsymbol{x}(1)\}$.

For each new sample pair $\{\boldsymbol{x}(n), d(n)\}$:

1) Compute the output: $y(n) = \displaystyle\sum\limits_{j=1}^{\mathrm{size}(\boldsymbol{C}(n-1))} \boldsymbol{a}_j(n-1)\,\kappa(\boldsymbol{C}_j(n-1), \boldsymbol{x}(n))$

2) Compute the error: $e(n) = d(n) - y(n)$

3) Compute the Euclidean distance between $\boldsymbol{x}(n)$ and the current dictionary $\boldsymbol{C}(n-1)$: $\mathrm{dis}(\boldsymbol{x}(n), \boldsymbol{C}(n-1)) = \mathop{\min}\limits_{1 \leqslant j \leqslant \mathrm{size}(\boldsymbol{C}(n-1))} \|\boldsymbol{x}(n) - \boldsymbol{C}_j(n-1)\|$

4) If $\mathrm{dis}(\boldsymbol{x}(n), \boldsymbol{C}(n-1)) > \gamma$, update the dictionary, $\boldsymbol{x}_{\rm q}(n) = \boldsymbol{x}(n)$ and $\boldsymbol{C}(n) = \{\boldsymbol{C}(n-1), \boldsymbol{x}_{\rm q}(n)\}$, and append the corresponding coefficient: $\boldsymbol{a}(n) = \left[\boldsymbol{a}(n-1),\ 2\mu \dfrac{e(n)}{\sqrt{1 + e^4(n)}}\right]$.

Otherwise, keep the dictionary unchanged, $\boldsymbol{C}(n) = \boldsymbol{C}(n-1)$; find the index of the dictionary element closest to the current input, $j^* = \mathop{\arg\min}\limits_{1 \leqslant j \leqslant \mathrm{size}(\boldsymbol{C}(n-1))} \|\boldsymbol{x}(n) - \boldsymbol{C}_j(n-1)\|$; take $\boldsymbol{C}_{j^*}(n-1)$ as the quantized value of the current input, $\boldsymbol{x}_{\rm q}(n) = \boldsymbol{C}_{j^*}(n-1)$; and update the coefficient vector: $\boldsymbol{a}_{j^*}(n) = \boldsymbol{a}_{j^*}(n-1) + 2\mu \dfrac{e(n)}{\sqrt{1 + e^4(n)}}$.

End loop.
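A corresponding sketch of Table 2 differs from plain KLIHS only in the dictionary step: the input is compared with the stored centers, and if the nearest one lies within $\gamma$, the LIHS-weighted correction is added to that center's coefficient instead of creating a new center. The output is computed over the stored centers $\boldsymbol{C}_j(n-1)$ rather than over all past inputs. As before, the Gaussian kernel, the class name `QKLIHS`, the default values of $\mu$, $h$, and $\gamma$, and the $\sin(\pi x)$ demo target are illustrative assumptions, not taken from the paper.

```python
import numpy as np


def gaussian_kernel(x, centers, h):
    """Assumed Gaussian kernel exp(-||x - c||^2 / (2 h^2)) between x and every stored center c."""
    diff = centers - x
    return np.exp(-np.sum(diff * diff, axis=1) / (2.0 * h * h))


class QKLIHS:
    """Sketch of Table 2: KLIHS with online vector quantization of the input space."""

    def __init__(self, mu=0.25, h=0.3, gamma=0.1):
        self.mu, self.h, self.gamma = mu, h, gamma  # step size, kernel width, quantization threshold
        self.centers = []   # dictionary C(n)
        self.coeffs = []    # coefficient vector a(n), one entry per center

    def predict(self, x):
        """Step 1: y(n) = sum_j a_j(n-1) * kappa(C_j(n-1), x(n))."""
        if not self.centers:
            return 0.0
        x = np.atleast_1d(np.asarray(x, dtype=float))
        k = gaussian_kernel(x, np.asarray(self.centers), self.h)
        return float(np.dot(self.coeffs, k))

    def update(self, x, d):
        """Steps 2-4 for one sample pair {x(n), d(n)}; returns the a priori error e(n)."""
        x = np.atleast_1d(np.asarray(x, dtype=float))
        e = d - self.predict(x)                              # step 2
        delta = 2.0 * self.mu * e / np.sqrt(1.0 + e**4)      # LIHS-weighted correction
        if not self.centers:                                 # initialization: C(1) = {x(1)}
            self.centers.append(x)
            self.coeffs.append(delta)
            return e
        dist = np.linalg.norm(np.asarray(self.centers) - x, axis=1)  # step 3
        j_star = int(np.argmin(dist))
        if dist[j_star] > self.gamma:                        # step 4: far from all centers
            self.centers.append(x)
            self.coeffs.append(delta)
        else:                                                # otherwise: quantize to C_{j*}
            self.coeffs[j_star] += delta
        return e


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    model = QKLIHS(mu=0.25, h=0.3, gamma=0.1)
    errors = np.array([model.update([x], np.sin(np.pi * x)) for x in rng.uniform(-1, 1, 500)])
    print("MSE last 100:", np.mean(errors[-100:] ** 2), " network size:", len(model.centers))
```

With scalar inputs in $[-1, 1]$ and $\gamma = 0.1$, the stored centers are pairwise more than $0.1$ apart, so this network can never exceed 20 centers regardless of how many samples arrive; setting $\gamma = 0$ recovers one center per distinct sample, as in KLIHS.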

Table 3. Mean ± standard deviation of different algorithms for short-time chaotic time series prediction.

| Algorithm | Mean ± standard deviation |
|---|---|
| KLMS | $0.2256 \pm 0.1082$ |
| KMCC | $0.1847 \pm 0.0034$ |
| KLMP | $0.1831 \pm 0.0061$ |
| KLL | $0.1807 \pm 0.0047$ |
| KLIHS | $0.1773 \pm 0.0045$ |

Table 4. Comparison of the mean square error and network size of the QKLIHS algorithm with different quantization thresholds $\gamma$ for short-time chaotic time series prediction.

| Quantization threshold $\gamma$ | Error mean ± standard deviation | Network size |
|---|---|---|
| 0 | $0.1738 \pm 0.0046$ | 1000 |
| 0.5 | $0.1759 \pm 0.0050$ | 454 |
| 0.9 | $0.1793 \pm 0.0041$ | 172 |
| 1.2 | $0.1820 \pm 0.0045$ | 101 |
| 2.0 | $0.2149 \pm 0.0056$ | 41 |

Table 5. Steady-state error mean and network size of the QKLIHS algorithm with different quantization thresholds $\gamma$ for nonlinear channel equalization.

| Quantization threshold $\gamma$ | Error mean ± standard deviation | Network size |
|---|---|---|
| 0 | $0.0422 \pm 0.0060$ | 1000 |
| 0.5 | $0.0454 \pm 0.0065$ | 127 |
| 0.9 | $0.0489 \pm 0.0063$ | 68 |
| 1.2 | $0.0560 \pm 0.0080$ | 47 |
| 2.0 | $0.0866 \pm 0.0095$ | 26 |
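Tables 4 and 5 show the network size falling as $\gamma$ grows, at the price of a larger error. The mechanism is in steps 3 and 4 of Table 2: a new center is stored only when the input lies more than $\gamma$ from every existing center, so the stored centers are pairwise more than $\gamma$ apart and every input is within $\gamma$ of the center it is quantized to. The sketch below isolates that online VQ step; the function name `online_vq` and the random three-dimensional inputs are hypothetical, and the sizes it prints are not the paper's results.

```python
import numpy as np


def online_vq(X, gamma):
    """Greedy online vector quantization as in steps 3-4 of Table 2.

    Returns the dictionary (one row per center) and, for each input row of X,
    the index of the center it was stored as or quantized to.
    """
    centers = [X[0]]          # C(1) = {x(1)}
    assignment = [0]
    for x in X[1:]:
        dist = np.linalg.norm(np.asarray(centers) - x, axis=1)
        j_star = int(np.argmin(dist))
        if dist[j_star] > gamma:      # far from every center: grow the network
            centers.append(x)
            assignment.append(len(centers) - 1)
        else:                         # close enough: reuse the nearest center
            assignment.append(j_star)
    return np.asarray(centers), np.asarray(assignment)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.uniform(-2.0, 2.0, size=(1000, 3))  # hypothetical 3-D input vectors
    for gamma in [0.0, 0.5, 0.9, 1.2, 2.0]:
        centers, _ = online_vq(X, gamma)
        print(f"gamma={gamma}: network size {len(centers)}")
```

Because the centers must be more than $\gamma$ apart, the network size is bounded by how many such points fit in the region the inputs occupy, independently of the number of samples; $\gamma = 0$ stores every distinct input.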

