
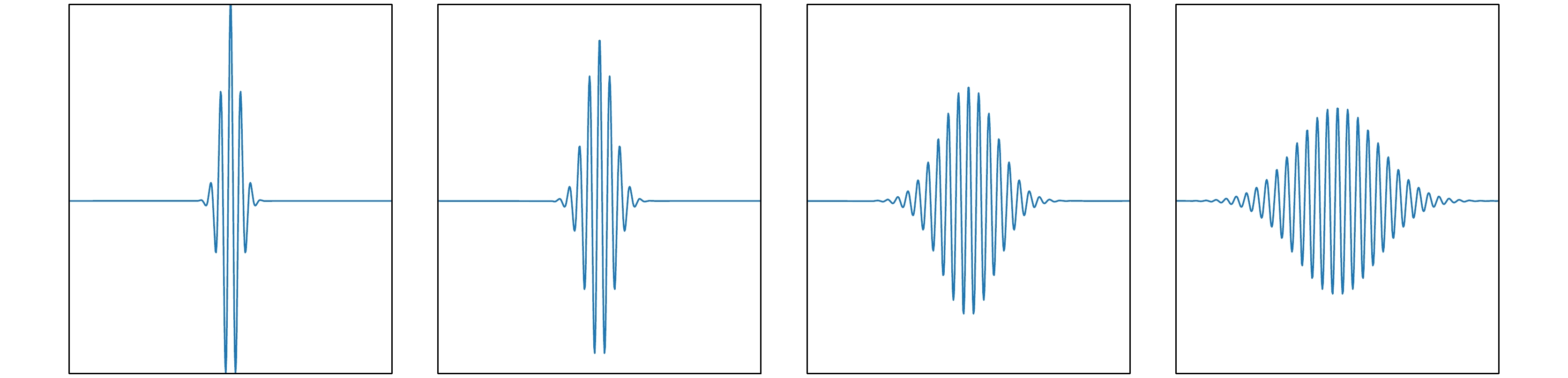

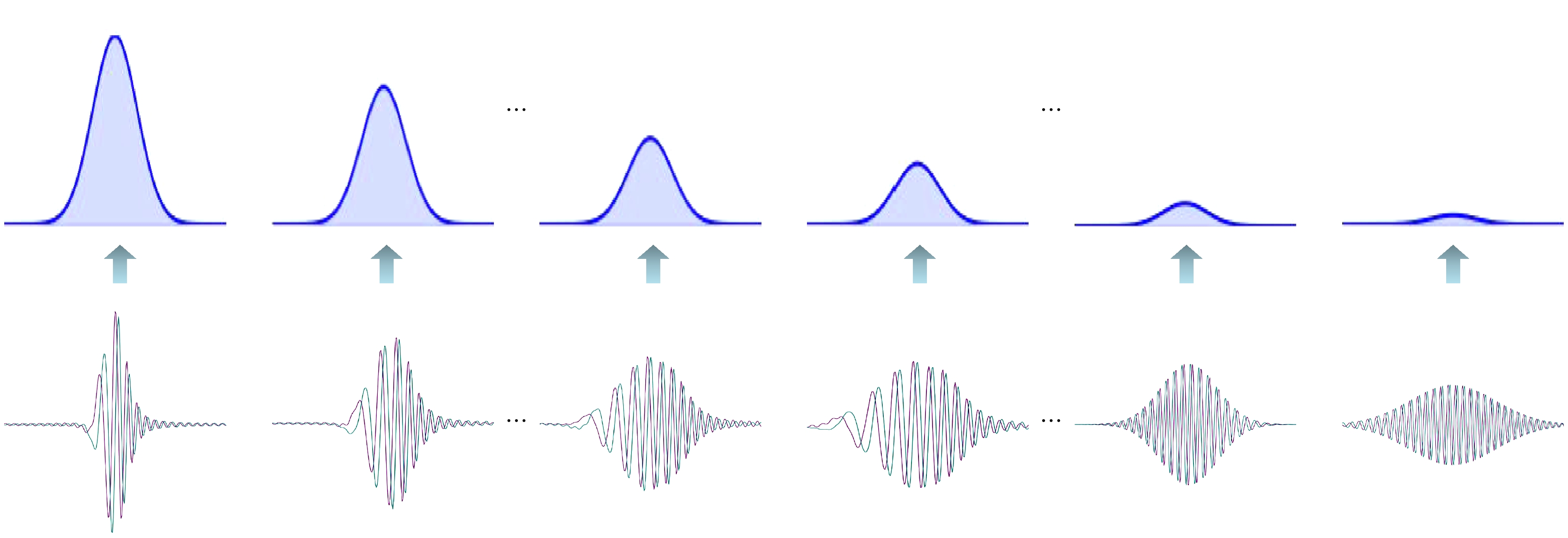

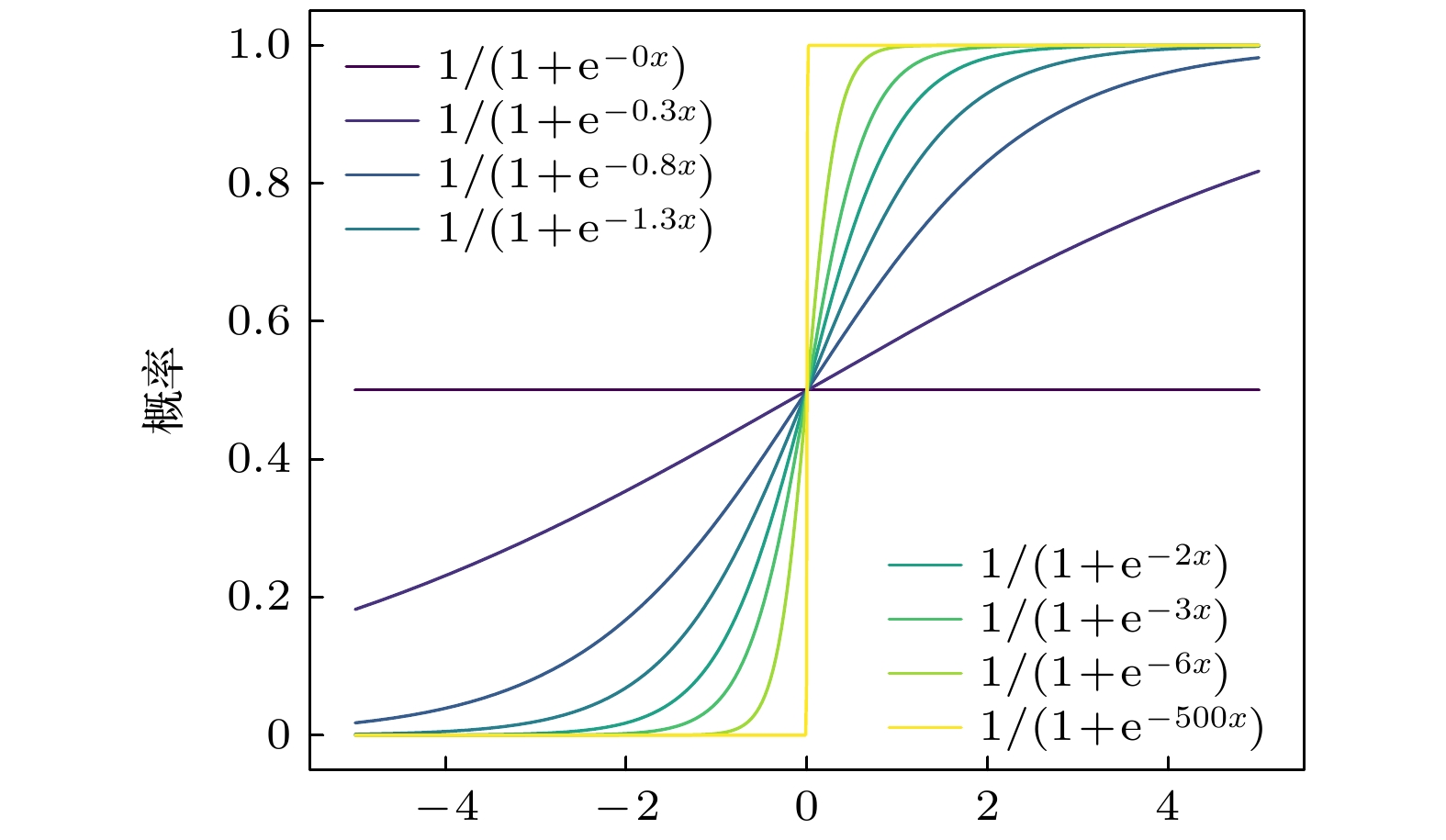

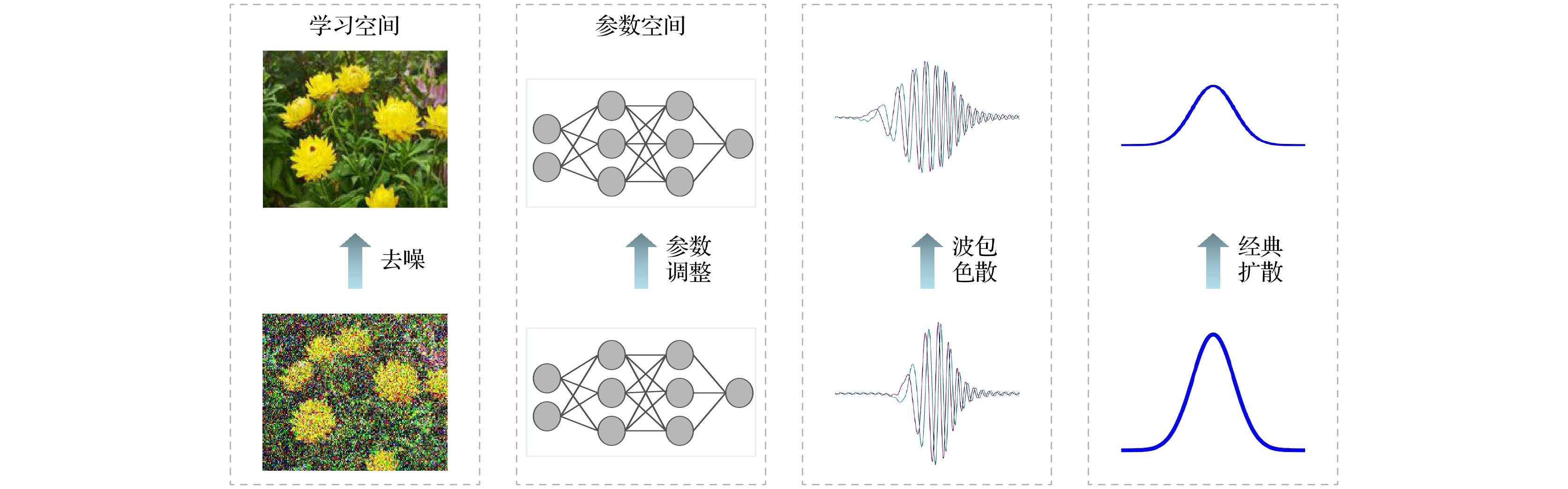

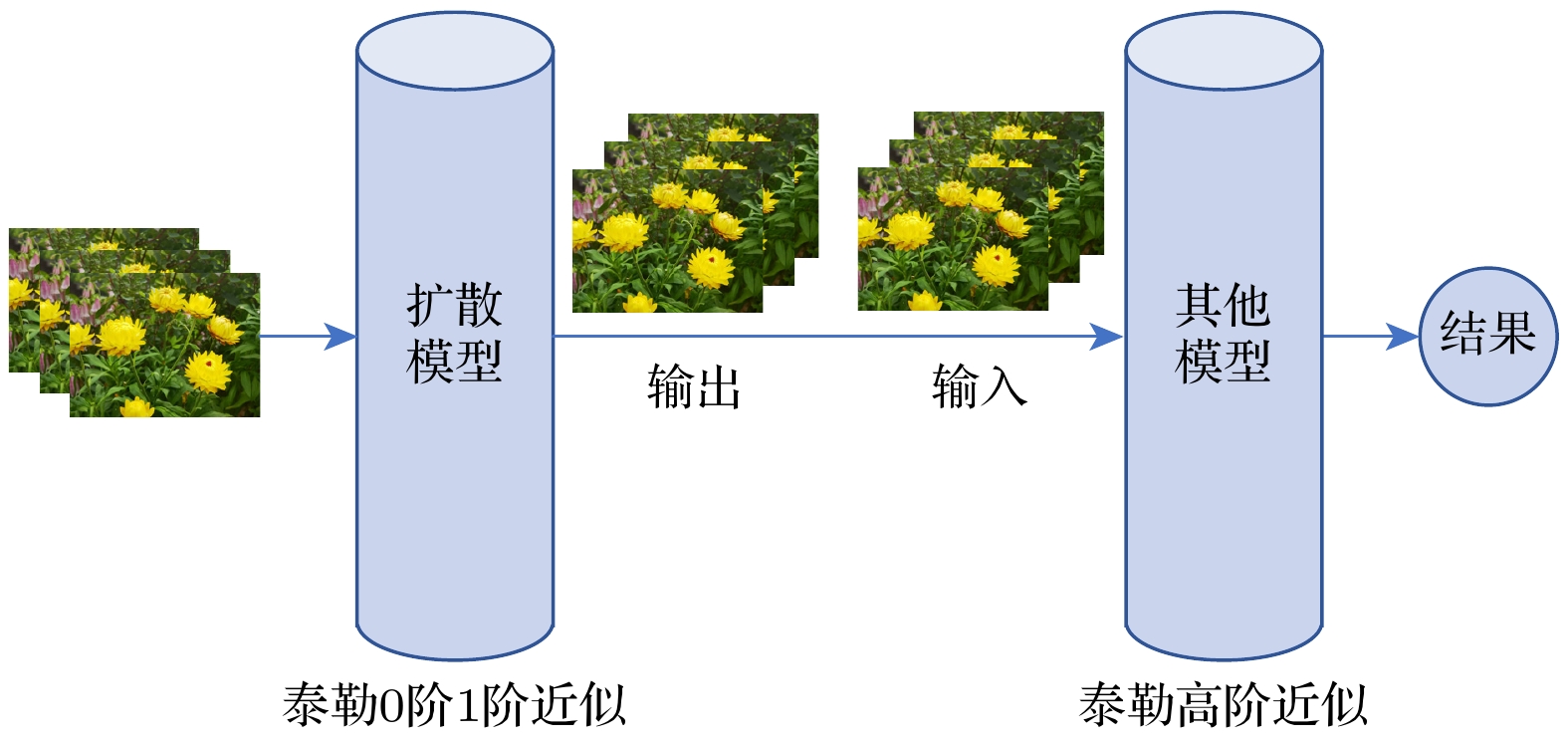

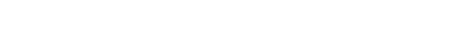

To address the current lack of rigorous theoretical models for the machine learning process, this paper models the iterative process of machine learning with a quantum dynamic method grounded in first-principles thinking. The approach treats the iterative evolution of an algorithm as a physical motion process, defines a generalized objective function in the parameter space of the machine learning algorithm, and regards the iterative process of machine learning as a search for the optimal value of this generalized objective function. In physical terms, this search corresponds to the system reaching its ground state. Since the dynamic equation of a quantum system is the Schrödinger equation, taking the generalized objective function as the potential energy term of the Schrödinger equation yields a quantum dynamic equation that describes the iterative process of machine learning. Machine learning can therefore be viewed as the process of finding the ground state of a quantum system constrained by the generalized objective function. The quantum dynamic equation recasts the iterative process as a time-dependent partial differential equation, giving it a precise mathematical representation, so that physical and mathematical theories can be used to study it. This also provides theoretical support for implementing the iterative process of machine learning on quantum computers. To further explain, through the quantum dynamic equation, how the iterative process of machine learning runs on classical computers, the Wick rotation is applied to transform the quantum dynamic equation into a thermodynamic equation. This demonstrates the convergence of the time evolution in machine learning: as time approaches infinity, the system relaxes into its ground state.
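
The convergence to the ground state can be illustrated numerically. The sketch below rests on assumptions that are not taken from the paper: a one-dimensional parameter x, units with ħ = 1, an illustrative mass m = 10, a hypothetical tilted double-well `objective` standing in for the generalized objective function f(x), and a simple explicit finite-difference scheme. After the Wick rotation t → -iτ, the Schrödinger equation iħ ∂ψ/∂t = -(ħ²/2m) ∂²ψ/∂x² + f(x)ψ becomes ħ ∂ψ/∂τ = (ħ²/2m) ∂²ψ/∂x² - f(x)ψ. Stepping this equation and renormalizing makes every excited component decay relative to the ground state, whose density then concentrates near the global minimum of f.

```python
import numpy as np

# Hypothetical 1-D "generalized objective function" (assumption for illustration):
# a tilted double well whose global minimum lies near x = -1.04.
def objective(x):
    return (x**2 - 1.0) ** 2 + 0.3 * x

def imaginary_time_ground_state(f, x_min=-3.0, x_max=3.0, n=301,
                                mass=10.0, dtau=2e-3, steps=10_000):
    """Relax psi under d(psi)/d(tau) = D * psi'' - f(x) * psi, with D = 1/(2m) and hbar = 1."""
    x = np.linspace(x_min, x_max, n)
    dx = x[1] - x[0]
    D = 1.0 / (2.0 * mass)          # hbar^2 / (2m) with hbar = 1
    V = f(x)                        # the objective plays the role of the potential energy
    psi = np.ones(n)                # flat initial guess: no prior knowledge of the minimum
    psi[0] = psi[-1] = 0.0          # Dirichlet boundaries: psi vanishes at the box edges
    for _ in range(steps):
        lap = np.zeros(n)
        lap[1:-1] = (psi[2:] - 2.0 * psi[1:-1] + psi[:-2]) / dx**2
        psi = psi + dtau * (D * lap - V * psi)      # explicit Euler step in imaginary time
        psi[0] = psi[-1] = 0.0
        psi /= np.sqrt(np.sum(psi**2) * dx)         # renormalize; excited states die out
    # Energy expectation <psi|H|psi> with H = -D d^2/dx^2 + V
    lap = np.zeros(n)
    lap[1:-1] = (psi[2:] - 2.0 * psi[1:-1] + psi[:-2]) / dx**2
    energy = np.sum(psi * (-D * lap + V * psi)) * dx
    return x, psi, energy

x, psi, energy = imaginary_time_ground_state(objective)
density = psi**2
mean_x = np.sum(x * density) * (x[1] - x[0])
print(f"peak of |psi|^2 at x = {x[np.argmax(density)]:.2f}, <x> = {mean_x:.2f}, E0 ~ {energy:.3f}")
```

The step size is chosen so that dτ times the largest eigenvalue of the discrete operator stays below 2, the stability limit of explicit Euler; a Crank-Nicolson or split-operator scheme would allow larger steps.
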
A Taylor expansion is used to approximate the generalized objective function, which has no analytical expression in the parameter space. Under the zero-order Taylor approximation of the generalized objective function, the quantum dynamic equation and the thermodynamic equation for machine learning reduce to the free-particle equation and the diffusion equation, respectively. This result indicates that the most basic dynamic processes in the iteration of machine learning on quantum computers and on classical computers are wave packet dispersion and wave packet diffusion, respectively. It thereby explains, from a dynamic perspective, the basic principles of the diffusion models that have been used successfully in generative neural networks in recent years. Diffusion models indirectly realize a thermal diffusion process in the parameter space by adding Gaussian noise to images and then removing it, thereby optimizing the generalized objective function in the parameter space; this diffusion process is the dynamic process under the zero-order approximation of the generalized objective function. We also use the thermodynamic equation of machine learning to derive the Softmax and Sigmoid functions, which are commonly used in artificial intelligence. These results show that the quantum dynamic method is an effective theoretical approach to studying the iterative process of machine learning, providing a rigorous mathematical and physical model for studying that process on both quantum and classical computers.
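
The zero-order case can be checked with a short simulation. If f(x) ≈ f(x₀) is constant, the thermodynamic equation ∂ψ/∂τ = D ∂²ψ/∂x² - f(x₀)ψ becomes, after factoring out e^(-f(x₀)τ), the plain diffusion equation ∂ρ/∂τ = D ∂²ρ/∂x², whose Gaussian solution has variance σ₀² + 2Dτ. The sketch below adds small independent Gaussian noise increments to a cloud of samples, loosely in the spirit of the forward (noising) process of diffusion models, and compares the empirical variance with that prediction. The helper `diffuse_samples` and all numerical values (D, step size, sample count) are illustrative assumptions, not the paper's method.

```python
import numpy as np

def diffuse_samples(x0, D, dt, steps, rng):
    """Random walk: each step adds N(0, 2*D*dt) noise to every sample.
    The sample density then obeys the diffusion equation dp/dt = D * d^2p/dx^2."""
    x = x0.copy()
    for _ in range(steps):
        x += rng.normal(0.0, np.sqrt(2.0 * D * dt), size=x.shape)
    return x

rng = np.random.default_rng(0)
sigma0, D, dt, steps = 0.1, 0.5, 0.01, 200    # illustrative values (assumptions)
x0 = rng.normal(0.0, sigma0, size=100_000)    # initial "wave packet" of samples
x_t = diffuse_samples(x0, D, dt, steps, rng)

t = dt * steps
predicted_var = sigma0**2 + 2.0 * D * t       # Gaussian solution of the diffusion equation
print(f"empirical var = {x_t.var():.3f}, predicted var = {predicted_var:.3f}")
```

Because independent Gaussian increments add in variance, the spread grows linearly in time, which is the wave packet diffusion described above.
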

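The link to Softmax and Sigmoid can be made concrete through the Boltzmann (Gibbs) distribution of a system in thermal equilibrium. This is a hedged sketch, not the paper's derivation: assuming each of K discrete outcomes carries an energy E_k = -z_k (the negative logit) at temperature T = 1, the equilibrium probabilities p_k ∝ exp(-E_k/T) are exactly the Softmax of the logits, and for a two-level system with the second energy fixed at zero the first probability reduces to the Sigmoid σ(z) = 1/(1 + e^(-z)). The function names `boltzmann`, `softmax` and `sigmoid` are illustrative.

```python
import numpy as np

def boltzmann(energies, temperature=1.0):
    """Equilibrium probabilities p_k proportional to exp(-E_k / T), computed stably."""
    e = np.asarray(energies, dtype=float) / temperature
    w = np.exp(-(e - e.min()))       # shift by the lowest energy to avoid overflow
    return w / w.sum()

def softmax(z):
    # Assumption: logits are negative energies, E_k = -z_k, at T = 1
    return boltzmann(-np.asarray(z, dtype=float))

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.array([2.0, 0.5, -1.0])
print(softmax(z))                                # probabilities summing to 1
print(boltzmann([-1.5, 0.0])[0], sigmoid(1.5))   # two-level system matches the sigmoid
```

Raising the temperature flattens the distribution toward uniform, and lowering it concentrates probability on the lowest-energy outcome, mirroring the temperature parameter often used with Softmax.
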

Keywords:

- quantum dynamics
- machine learning
- diffusion model
- Schrödinger equation
