
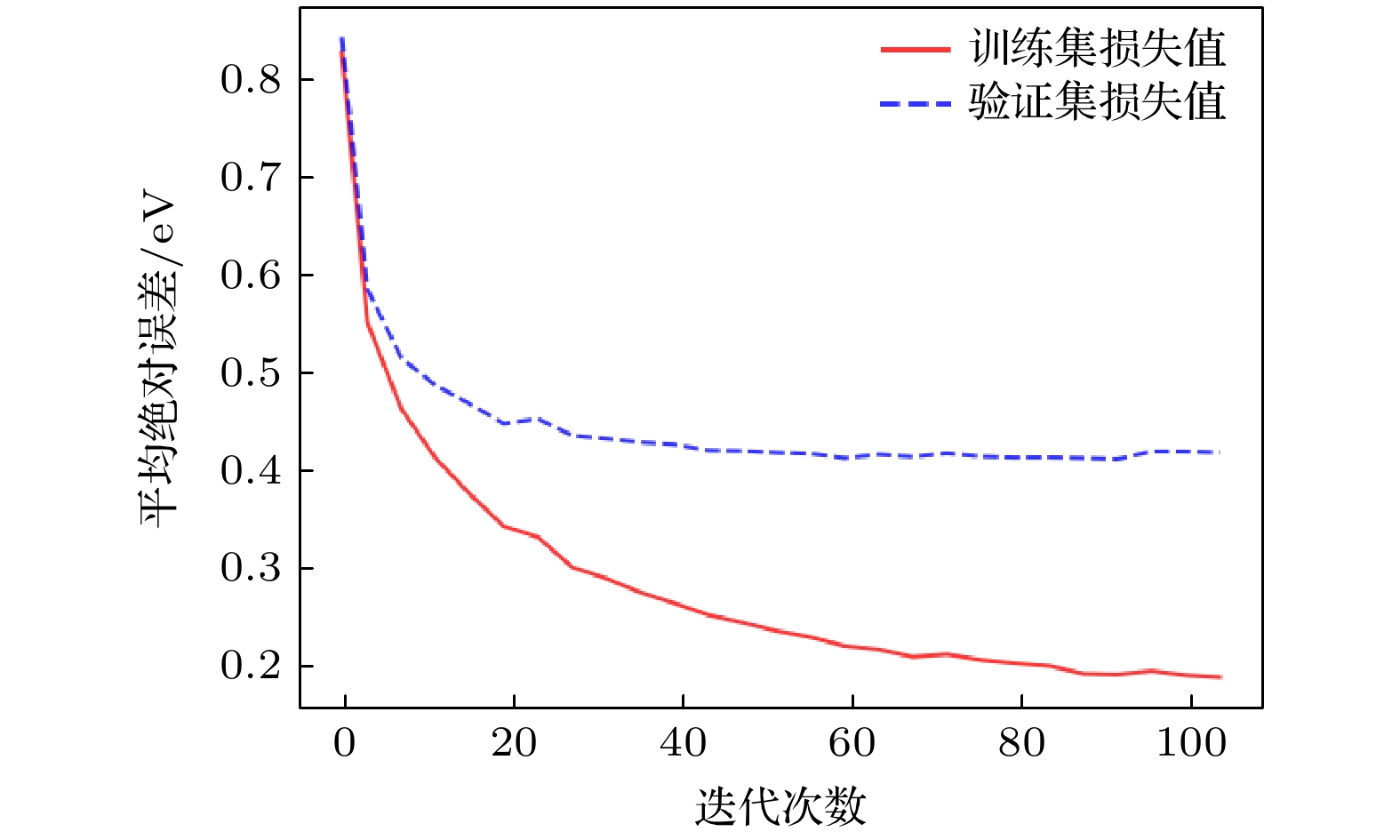

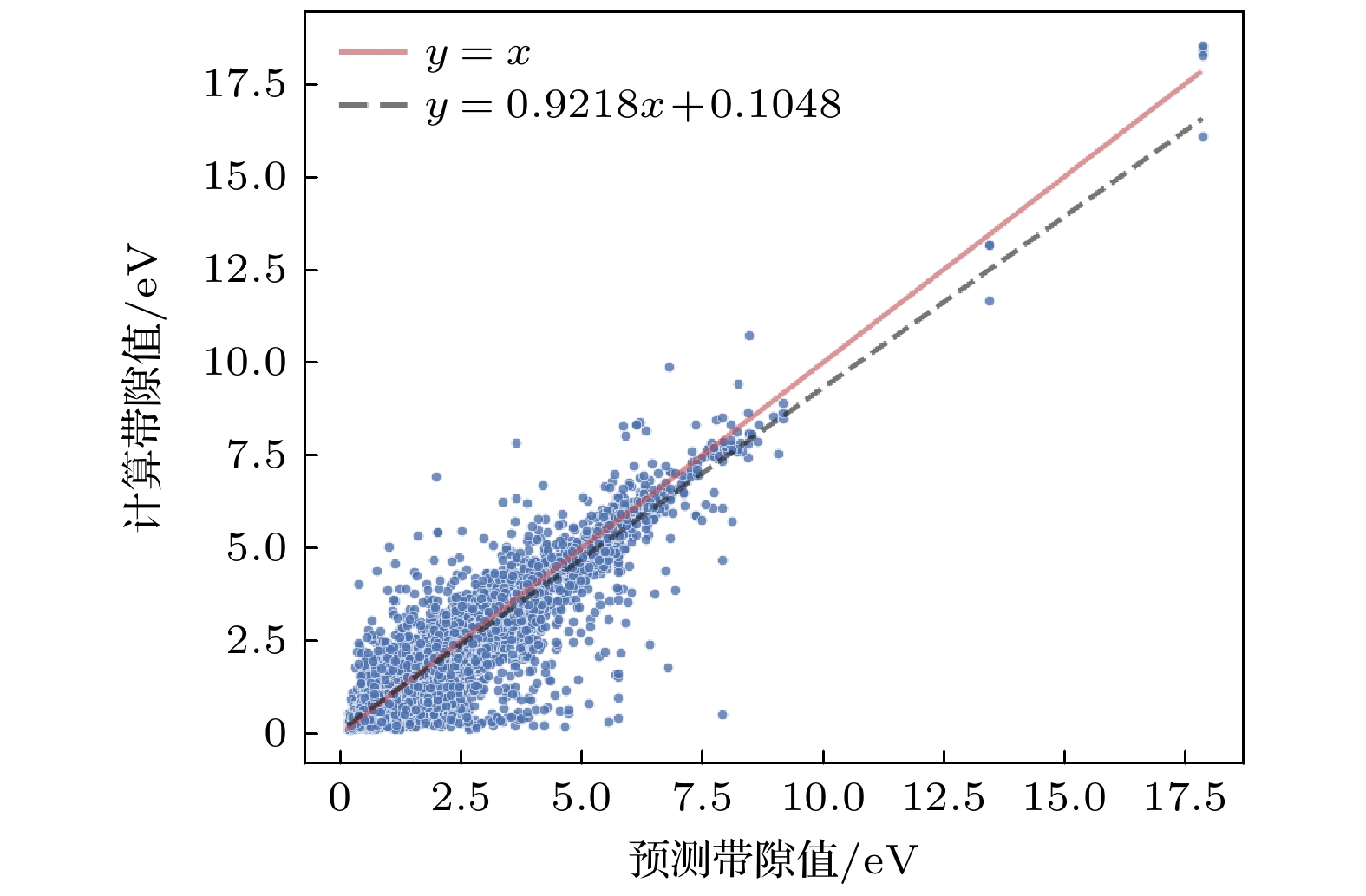

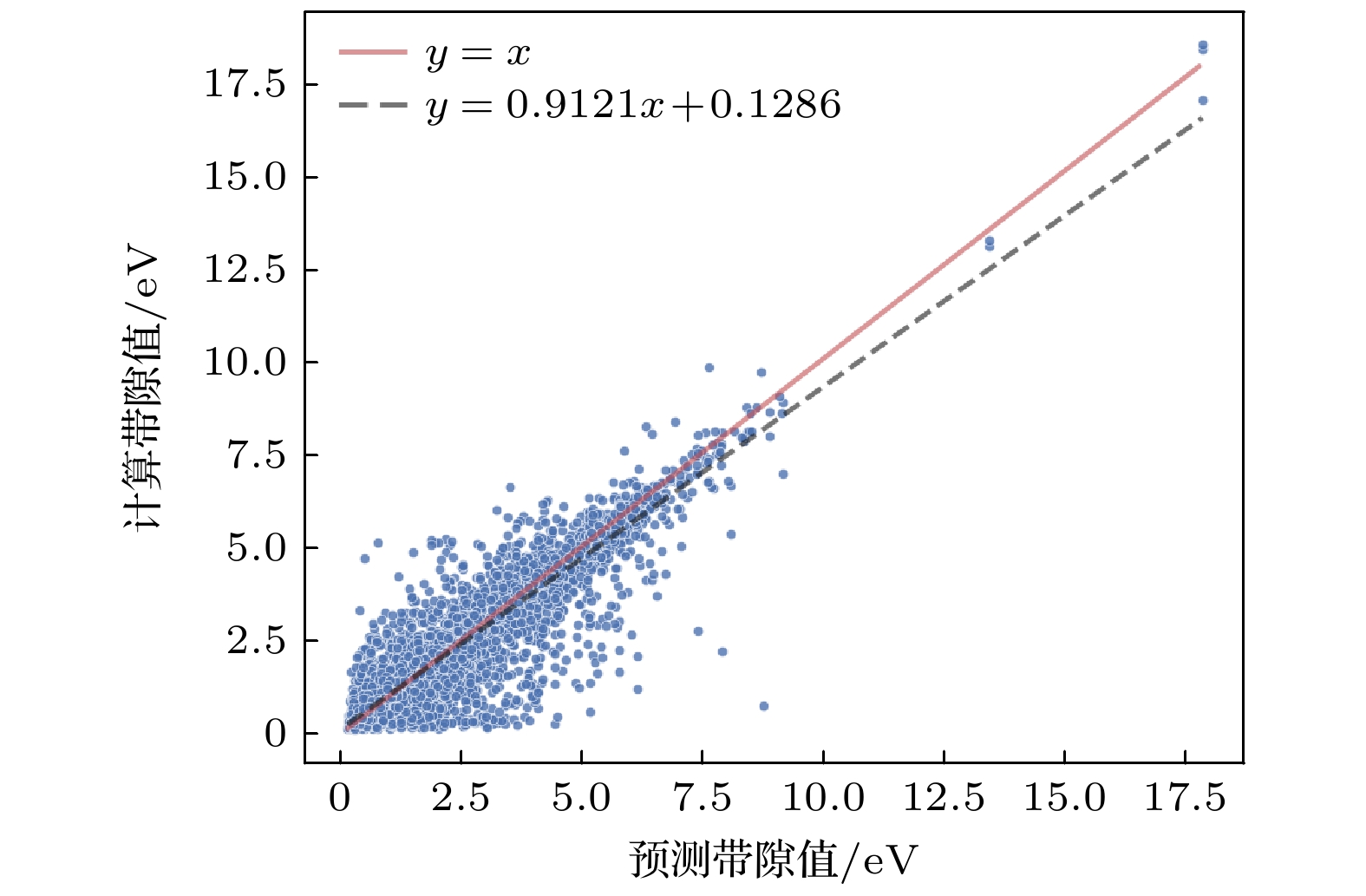

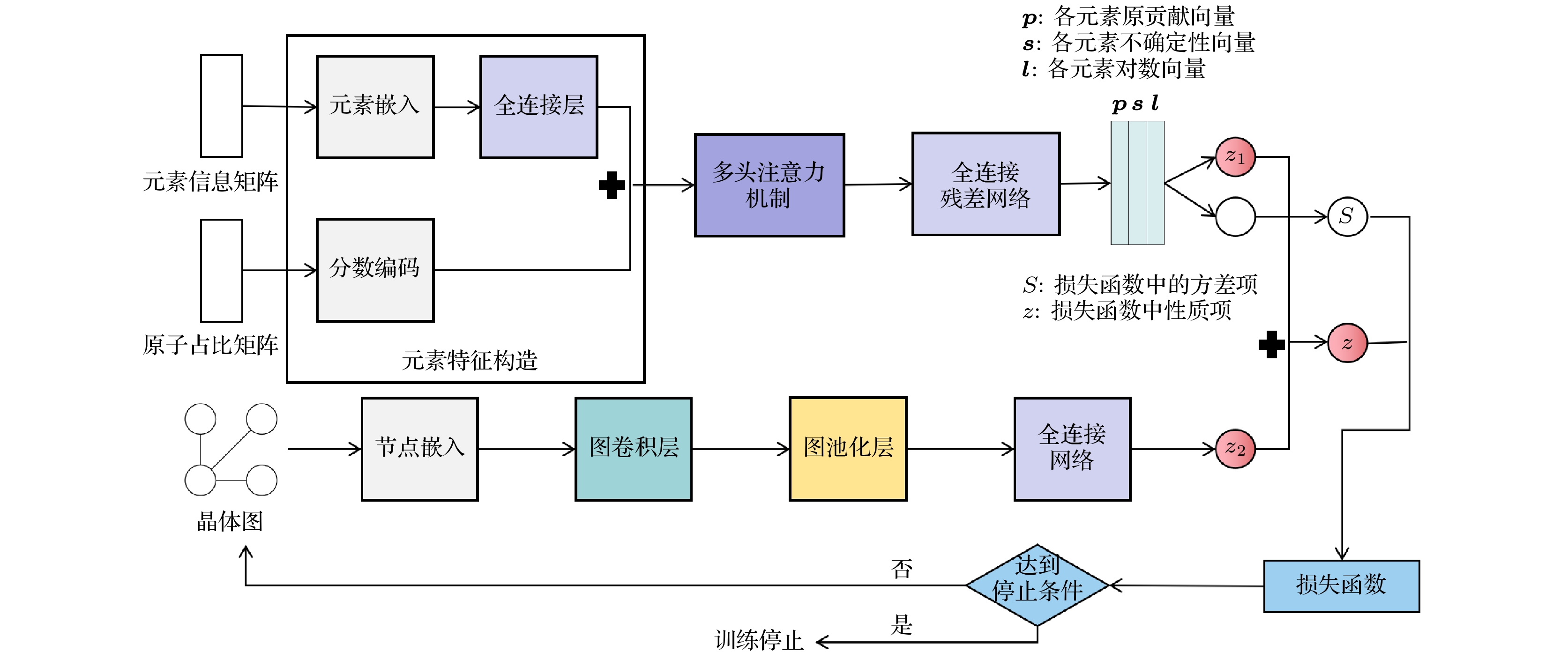

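To obtain the band gaps of perovskite materials quickly, a feature fusion neural network model (CGCrabNet) is established, and a transfer learning strategy is used to predict the band gaps of perovskite materials. CGCrabNet is an end-to-end neural network model that extracts features from both the chemical formula and the crystal structure of a material and fits the mapping between these features and the band gap. After pre-training on data from the Open Quantum Materials Database (OQMD dataset), the parameters of CGCrabNet are fine-tuned with only 175 perovskite samples to improve the robustness of the model. Numerical experiments show that on the OQMD dataset the band gap prediction error of CGCrabNet is 0.014 eV lower than that of the compositionally restricted attention-based network (CrabNet). The mean absolute error of the proposed model for perovskite materials is 0.374 eV, which is 0.304 eV, 0.441 eV and 0.194 eV lower than those of random forest regression, support vector machine regression and gradient boosting regression, respectively. In addition, the band gaps predicted for perovskite materials such as SrHfO3 and RbPaO3 differ from the first-principles values by less than 0.05 eV, which shows that CGCrabNet can predict the properties of perovskite materials quickly and accurately and accelerate the development of new materials.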

The band gap is a key physical quantity in materials design. First-principles calculations based on density functional theory can predict band gaps approximately, but they often require substantial computational resources and time. Deep learning models fit data well and extract features from data automatically, and they are increasingly used to predict band gaps. To obtain the band gaps of perovskite materials quickly, this paper establishes a feature fusion neural network model, named CGCrabNet, and uses a transfer learning strategy to predict the band gaps of perovskite materials. CGCrabNet is an end-to-end neural network model: it extracts features from both the chemical formula and the crystal structure of a material and fits the mapping between these features and the band gap. After pre-training on data from the Open Quantum Materials Database (OQMD dataset), the parameters of CGCrabNet are fine-tuned with only 175 perovskite samples to improve the robustness of the model. Numerical experiments show that, on the OQMD dataset, the band gap prediction error of CGCrabNet is 0.014 eV lower than that of the compositionally restricted attention-based network (CrabNet). For perovskite materials, the mean absolute error (MAE) of the proposed model is 0.374 eV, which is 0.304 eV, 0.441 eV and 0.194 eV lower than those of random forest regression, support vector machine regression and gradient boosting regression, respectively. The test-set MAE of CGCrabNet trained only on perovskite data is 0.536 eV; with pre-training, the MAE decreases by 0.162 eV, which indicates that the transfer learning strategy significantly improves prediction accuracy on small datasets such as the perovskite dataset.
For some perovskite materials, such as SrHfO3 and RbPaO3, the band gaps predicted by the model differ from those calculated by first-principles methods by less than 0.05 eV, which indicates that CGCrabNet can predict the properties of new materials quickly and accurately and accelerate the development of new materials.
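The pre-train-then-fine-tune strategy described in the abstract can be illustrated with a deliberately tiny example. This is not CGCrabNet: a one-dimensional linear model stands in for the network, a large synthetic "source" set stands in for OQMD, and five points from a slightly shifted relation stand in for the 175 perovskite samples. All data and helper names (`gd_fit`, `mae`) are hypothetical.

```python
"""Toy illustration of pre-training followed by fine-tuning (warm start).

Hypothetical setup: a 1-D linear model stands in for the neural network,
a large synthetic source set stands in for the pre-training database, and
a few points from a shifted relation stand in for the small target set.
"""


def gd_fit(xs, ys, w=0.0, b=0.0, lr=0.01, steps=100):
    """Minimise the mean squared error of y ~ w*x + b by gradient descent."""
    n = len(xs)
    for _ in range(steps):
        # Gradients of (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = 2.0 / n * sum((w * x + b - y) * x for x, y in zip(xs, ys))
        grad_b = 2.0 / n * sum((w * x + b - y) for x, y in zip(xs, ys))
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mae(xs, ys, w, b):
    """Mean absolute error of the linear model on (xs, ys)."""
    return sum(abs(w * x + b - y) for x, y in zip(xs, ys)) / len(xs)


# Large source dataset: y = 2x + 1 (stand-in for the pre-training data)
src_x = [i / 10 for i in range(41)]  # 0.0 .. 4.0
src_y = [2.0 * x + 1.0 for x in src_x]

# Small target dataset from a related but shifted relation: y = 2.2x + 0.9
tgt_train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
tgt_train_y = [2.2 * x + 0.9 for x in tgt_train_x]
tgt_test_x = [0.5, 1.5, 2.5, 3.5]
tgt_test_y = [2.2 * x + 0.9 for x in tgt_test_x]

# 1) Pre-train on the large source set until (near) convergence
w_pre, b_pre = gd_fit(src_x, src_y, steps=2000)

# 2) Fine-tune: start from the pre-trained parameters, few steps on small data
w_ft, b_ft = gd_fit(tgt_train_x, tgt_train_y, w=w_pre, b=b_pre, steps=5)

# Baseline: same small training budget, but starting from zero
w_sc, b_sc = gd_fit(tgt_train_x, tgt_train_y, steps=5)

mae_finetuned = mae(tgt_test_x, tgt_test_y, w_ft, b_ft)
mae_scratch = mae(tgt_test_x, tgt_test_y, w_sc, b_sc)
print(f"fine-tuned MAE: {mae_finetuned:.3f}, from-scratch MAE: {mae_scratch:.3f}")
```

With the same five-step budget, the warm-started model ends much closer to the target relation than the one trained from zero. This only mirrors, in a very simplified way, the reported drop from 0.536 eV to 0.374 eV; it says nothing about how CGCrabNet itself is fine-tuned.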

Keywords:

- feature fusion neural network

- regression model

- band gap evaluation

- transfer learning

[1] Fan X L 2015 Mater. China 34 689

[2] Wan X Y, Zhang Y H, Lu S H, Wu Y L, Zhou Q H, Wang J L 2022 Acta Phys. Sin. 71 177101

[3] Xie T, Grossman J C 2018 Phys. Rev. Lett. 120 145301

[4] Chen C, Ye W K, Zuo Y X, Zheng C, Ong S P 2019 Chem. Mater. 31 3564

[5] Karamad M, Magar R, Shi Y T, Siahrostami S, Gates I D, Farimani A B 2020 Phys. Rev. Mater. 4 093801

[6] Jha D, Ward L, Paul A, Liao W K, Choudhary A, Wolverton C, Agrawal A 2018 Sci. Rep. 8 17593

[7] Goodall R E A, Lee A A 2020 Nat. Commun. 11 6280

[8] Wang A Y T, Kauwe S K, Murdock R J, Sparks T D 2021 npj Comput. Mater. 7 77

[9] Hu Y, Zhang S L, Zhou W H, Liu G Y, Xu L L, Yin W J, Zeng H B 2023 J. Chin. Ceram. Soc. 51 452

[10] Guo Z, Lin B 2021 Sol. Energy 228 689

[11] Gao Z Y, Zhang H W, Mao G Y, Ren J N, Chen Z H, Wu C C, Gates I D, Yang W J, Ding X L, Yao J X 2021 Appl. Surf. Sci. 568 150916

[12] Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A N, Kaiser L, Polosukhin I 2017 arXiv: 1706.03762v5 [cs.CL]

[13] Nix D A, Weigend A S 1994 Proceedings of 1994 IEEE International Conference on Neural Networks (ICNN'94) Orlando, FL, USA, 28 June–2 July, 1994 p55

[14] You Y, Li J, Reddi S, et al. 2020 arXiv: 1904.00962v5 [cs.LG]

[15] Smith L N 2017 arXiv: 1506.01186v6 [cs.CV]

[16] Saal J E, Kirklin S, Aykol M, Meredig B, Wolverton C 2013 JOM 65 1501

[17] Jain A, Ong S P, Hautier G, Chen W, Richards W D, Dacek S, Cholia S, Gunter D, Skinner D, Ceder G, Persson K A 2013 APL Mater. 1 011002

[18] Yamamoto T 2019 Crystal Graph Neural Networks for Data Mining in Materials Science (Yokohama: Research Institute for Mathematical and Computational Sciences, LLC)

[19] Kirklin S, Saal J E, Meredig B, Thompson A, Doak J W, Aykol M, Rühl S, Wolverton C 2015 npj Comput. Mater. 1 15

[20] Calfa B A, Kitchin J R 2016 AIChE J. 62 2605

[21] Ward L, Agrawal A, Choudhary A, Wolverton C 2016 npj Comput. Mater. 2 16028

[22] Tshitoyan V, Dagdelen J, Weston L, Dunn A, Rong Z Q, Kononova O, Persson K A, Ceder G, Jain A 2019 Nature 571 95

[23] Breiman L 2001 Mach. Learn. 45 5

[24] Wu Y R, Li H P, Gan X S 2013 Adv. Mater. Res. 848 122

[25] Sun T, Yuan J M 2023 Acta Phys. Sin. 72 028901

[26] Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay É 2011 J. Mach. Learn. Res. 12 2825


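Table 1. Hyperparameter values.

| Hyperparameter | Meaning | Value |
|---|---|---|
| $d_{\rm m}$ | Dimension of the vectors constructed from element features | 512 |
| $N_{f}$ | Maximum number of distinct elements in a chemical formula | 7 |
| $N$ | Number of attention layers | 3 |
| $n$ | Number of attention heads | 4 |
| $I$ | Total number of element types used in training | 89 |
| $T$ | Number of graph convolution layers | 3 |
| $V_{\rm cg}$ | Dimension of the element vectors after node embedding | 16 |
| $w_1 : w_2$ | Weight ratio | 7:3 |
| Epochs | Maximum number of training epochs | 300 |
| batch_size | Batch size | 256 |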
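For readers reimplementing the model, the Table 1 settings can be gathered in one configuration object. The sketch below is illustrative only: the class and field names, the `normalized_weights` helper, and the assumption that the attention heads split $d_{\rm m}$ evenly (as in the standard Transformer) are not taken from the paper, and this section does not state how $w_1$ and $w_2$ enter the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CGCrabNetConfig:
    """Hyperparameters from Table 1 (field names are hypothetical)."""
    d_model: int = 512            # d_m: dimension of element-feature vectors
    max_elements: int = 7         # N_f: max distinct elements in a formula
    attn_layers: int = 3          # N: number of attention layers
    attn_heads: int = 4           # n: number of attention heads
    n_element_types: int = 89     # I: element types seen in training
    graph_conv_layers: int = 3    # T: number of graph convolution layers
    cg_embed_dim: int = 16        # V_cg: element-vector size after node embedding
    weight_ratio: tuple = (7, 3)  # w1 : w2
    max_epochs: int = 300
    batch_size: int = 256

    def normalized_weights(self):
        """Rescale the w1:w2 ratio so the two weights sum to 1."""
        w1, w2 = self.weight_ratio
        total = w1 + w2
        return w1 / total, w2 / total

    def head_dim(self):
        """Per-head width, assuming d_model is split evenly across heads."""
        if self.d_model % self.attn_heads:
            raise ValueError("d_model must be divisible by attn_heads")
        return self.d_model // self.attn_heads


cfg = CGCrabNetConfig()
print(cfg.normalized_weights(), cfg.head_dim())
```

Freezing the dataclass keeps a run's hyperparameters from being changed accidentally after training starts.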

Table 2. Test results of element embedding methods (in eV).

| Element embedding | Train MAE | Val MAE | Test MAE |
|---|---|---|---|
| One-Hot | 0.185 | 0.423 | 0.433 |
| Magpie | 0.428 | 0.546 | 0.566 |
| Mat2vec | 0.203 | 0.408 | 0.420 |
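Table 2 compares three ways of turning the elements of a formula into vectors. The simplest, one-hot encoding, can be sketched as follows: the formula is parsed into element amounts, the amounts are normalized to fractions, and each element becomes a one-hot row, padded to $N_f = 7$ rows as in Table 1. The 13-element vocabulary and the helper names are hypothetical (the model uses $I = 89$ element types), the parser does not handle parentheses, and Magpie or Mat2vec embeddings would replace each one-hot row with a looked-up descriptor vector.

```python
import re

# Hypothetical, truncated vocabulary; Table 1 lists I = 89 element types.
ELEMENTS = ["H", "Li", "B", "O", "F", "Na", "Mg", "Cl", "K", "Ca", "Sr", "Hf", "Cs"]
INDEX = {el: i for i, el in enumerate(ELEMENTS)}
MAX_ELEMENTS = 7  # N_f in Table 1


def parse_formula(formula):
    """Parse a formula without parentheses, e.g. 'SrHfO3' -> {'Sr': 1, 'Hf': 1, 'O': 3}."""
    counts = {}
    for symbol, amount in re.findall(r"([A-Z][a-z]?)(\d*\.?\d*)", formula):
        counts[symbol] = counts.get(symbol, 0.0) + (float(amount) if amount else 1.0)
    return counts


def encode(formula):
    """Return a padded one-hot matrix (N_f x vocab size) and fractional amounts."""
    counts = parse_formula(formula)
    if len(counts) > MAX_ELEMENTS:
        raise ValueError("formula has more than N_f distinct elements")
    total = sum(counts.values())
    one_hot, fractions = [], []
    for symbol, amount in counts.items():
        row = [0] * len(ELEMENTS)
        row[INDEX[symbol]] = 1  # raises KeyError for elements outside the vocabulary
        one_hot.append(row)
        fractions.append(amount / total)
    # Pad with all-zero rows so every formula has exactly N_f rows
    while len(one_hot) < MAX_ELEMENTS:
        one_hot.append([0] * len(ELEMENTS))
        fractions.append(0.0)
    return one_hot, fractions


matrix, fracs = encode("SrHfO3")
print(fracs)
```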

Table 3. Test results of deep learning models (in eV).

| Model | Train MAE | Val MAE | Test MAE |
|---|---|---|---|
| CGCNN | 0.502 | 0.605 | 0.601 |
| Roost | 0.178 | 0.447 | 0.455 |
| CrabNet | 0.226 | 0.422 | 0.427 |
| HotCrab | 0.177 | 0.422 | 0.440 |
| CGCrabNet | 0.187 | 0.408 | 0.413 |
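The 0.014 eV improvement over CrabNet quoted in the abstract is the difference between the test MAEs in Table 3 (0.427 eV versus 0.413 eV). A short script that ranks the models by test MAE and reports each model's train/test gap makes such comparisons explicit; the numbers are transcribed from Table 3 and the script is only an illustration.

```python
# (train, val, test) MAE in eV, transcribed from Table 3
results = {
    "CGCNN": (0.502, 0.605, 0.601),
    "Roost": (0.178, 0.447, 0.455),
    "CrabNet": (0.226, 0.422, 0.427),
    "HotCrab": (0.177, 0.422, 0.440),
    "CGCrabNet": (0.187, 0.408, 0.413),
}

ref = results["CGCrabNet"][2]
best = min(results, key=lambda m: results[m][2])  # lowest test MAE

for model, (train, val, test) in sorted(results.items(), key=lambda kv: kv[1][2]):
    # Positive difference = higher (worse) test MAE than CGCrabNet;
    # a large test-train gap hints at overfitting.
    print(f"{model:10s} test={test:.3f}  vs CGCrabNet={test - ref:+.3f}  "
          f"test-train gap={test - train:.3f}")
```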

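Table 4. Regression model hyperparameters.

| Method | Hyperparameter | Value |
|---|---|---|
| RF | Number of base learners | 90 |
| SVR | Kernel function | Polynomial kernel |
| | Polynomial degree | 3 |
| | Regularization strength | 2 |
| | Gamma | 2 |
| | Zero coefficient (coef0) | 1.5 |
| GBR | Number of base learners | 500 |
| | Learning rate | 0.2 |
| | Maximum depth | 4 |
| | Loss function | Absolute error |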
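The baseline settings in Table 4 map naturally onto scikit-learn estimators, and the reference list includes scikit-learn [26]. The mapping below is an assumption: scikit-learn's `C` is inversely proportional to regularization strength, so "regularization strength 2" could correspond to `C=2` or `C=0.5`; "absolute error" is taken to mean `loss="absolute_error"` (available since scikit-learn 1.0). Settings not listed in Table 4, such as random seeds, are left at their defaults, and `build_baselines` is a hypothetical helper.

```python
# Table 4 hyperparameters expressed as scikit-learn keyword arguments.
# The name mapping (e.g. "regularization strength" -> C) is an assumption.
BASELINE_PARAMS = {
    "RF": {"n_estimators": 90},
    "SVR": {"kernel": "poly", "degree": 3, "C": 2.0, "gamma": 2.0, "coef0": 1.5},
    "GBR": {"n_estimators": 500, "learning_rate": 0.2, "max_depth": 4,
            "loss": "absolute_error"},
}


def build_baselines(params=BASELINE_PARAMS):
    """Instantiate the three baseline regressors (requires scikit-learn >= 1.0)."""
    # Imported lazily so the parameter table can be inspected without scikit-learn
    from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
    from sklearn.svm import SVR

    return {
        "RF": RandomForestRegressor(**params["RF"]),
        "SVR": SVR(**params["SVR"]),
        "GBR": GradientBoostingRegressor(**params["GBR"]),
    }
```

Usage, with a hypothetical feature matrix `X` and target vector `y`: `models = build_baselines()` followed by `models["GBR"].fit(X, y)`.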

Table 5. Comparison of predicted and calculated band gaps of perovskite materials (in eV).

| Formula | Calculated band gap | CGCrabNet | RF | SVR | GBR |
|---|---|---|---|---|---|
| NbTlO3 | 0.112 | 0.658 | 1.458 | 1.296 | 1.614 |
| ZnAgF3 | 1.585 | 1.776 | 1.836 | 2.194 | 1.840 |
| AcAlO3 | 4.102 | 3.212 | 2.881 | 3.197 | 2.963 |
| BeSiO3 | 0.269 | 1.116 | 2.813 | 2.963 | 3.777 |
| TmCrO3 | 1.929 | 1.682 | 1.612 | 1.987 | 1.668 |
| SmCoO3 | 0.804 | 0.644 | 0.821 | 1.043 | 0.724 |
| CdGeO3 | 0.102 | 0.586 | 0.911 | 1.675 | 0.196 |
| CsCaCl3 | 5.333 | 4.891 | 4.918 | 5.116 | 5.157 |
| HfPbO3 | 2.415 | 2.724 | 1.733 | 2.346 | 1.967 |
| SiPbO3 | 1.185 | 1.327 | 1.407 | 1.543 | 1.079 |
| SrHfO3 | 3.723 | 3.683 | 2.821 | 3.370 | 3.253 |
| PrAlO3 | 2.879 | 3.139 | 2.665 | 2.091 | 2.984 |
| BSbO3 | 1.405 | 1.123 | 0.653 | -0.025 | 0.579 |
| CsEuCl3 | 0.637 | 0.388 | 1.500 | 4.477 | 0.949 |
| LiPaO3 | 3.195 | 3.100 | 2.443 | -0.306 | 2.553 |
| PmErO3 | 1.696 | 1.309 | 1.550 | 1.682 | 1.252 |
| TlNiF3 | 3.435 | 2.806 | 2.063 | 3.049 | 3.255 |
| MgGeO3 | 3.677 | 1.256 | 0.979 | 1.623 | 1.073 |
| NaVO3 | 0.217 | 0.785 | 0.911 | 0.180 | 0.989 |
| RbVO3 | 0.250 | 0.616 | 1.736 | 0.290 | 1.534 |
| KZnF3 | 3.695 | 3.785 | 2.853 | 3.203 | 3.295 |
| NdInO3 | 1.647 | 1.587 | 1.653 | 0.889 | 1.590 |
| RbCaF3 | 6.397 | 6.974 | 6.482 | 6.372 | 6.028 |
| RbPaO3 | 3.001 | 2.952 | 2.864 | -0.234 | 2.937 |
| PmInO3 | 1.618 | 1.480 | 1.896 | 1.222 | 1.754 |
| KMnF3 | 2.656 | 2.991 | 2.647 | 2.428 | 2.730 |
| NbAgO3 | 1.334 | 1.419 | 1.369 | 1.227 | 1.265 |
| CsCdF3 | 3.286 | 3.078 | 2.990 | 2.724 | 2.879 |
| KCdF3 | 3.101 | 3.125 | 2.789 | 2.365 | 2.990 |
| CsYbF3 | 7.060 | 6.641 | 6.325 | 6.523 | 6.736 |
| NaTaO3 | 2.260 | 1.714 | 1.680 | 2.093 | 1.715 |
| CsCaF3 | 6.900 | 6.874 | 6.291 | 6.416 | 6.379 |
| RbSrCl3 | 4.626 | 4.470 | 4.966 | 4.647 | 4.795 |
| AcGaO3 | 2.896 | 3.199 | 2.740 | 2.869 | 2.981 |
| BaCeO3 | 2.299 | 1.789 | 3.918 | 2.696 | 3.655 |

Note: CGCrabNet, RF, SVR and GBR denote the band gaps predicted by the feature fusion neural network, random forest regression, support vector regression and gradient boosting regression models, respectively.
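Table 5 lists 35 materials, exactly 20% of the 175 perovskite samples, so it is plausibly the complete test set (an assumption; this section does not say so). The sketch below recomputes each model's MAE from the transcribed rows and lists the materials whose CGCrabNet prediction lies within 0.05 eV of the calculated value. The helper names are hypothetical.

```python
# Rows transcribed from Table 5 (eV):
# formula, calculated gap, CGCrabNet, RF, SVR, GBR
TABLE5 = """
NbTlO3 0.112 0.658 1.458 1.296 1.614
ZnAgF3 1.585 1.776 1.836 2.194 1.840
AcAlO3 4.102 3.212 2.881 3.197 2.963
BeSiO3 0.269 1.116 2.813 2.963 3.777
TmCrO3 1.929 1.682 1.612 1.987 1.668
SmCoO3 0.804 0.644 0.821 1.043 0.724
CdGeO3 0.102 0.586 0.911 1.675 0.196
CsCaCl3 5.333 4.891 4.918 5.116 5.157
HfPbO3 2.415 2.724 1.733 2.346 1.967
SiPbO3 1.185 1.327 1.407 1.543 1.079
SrHfO3 3.723 3.683 2.821 3.370 3.253
PrAlO3 2.879 3.139 2.665 2.091 2.984
BSbO3 1.405 1.123 0.653 -0.025 0.579
CsEuCl3 0.637 0.388 1.500 4.477 0.949
LiPaO3 3.195 3.100 2.443 -0.306 2.553
PmErO3 1.696 1.309 1.550 1.682 1.252
TlNiF3 3.435 2.806 2.063 3.049 3.255
MgGeO3 3.677 1.256 0.979 1.623 1.073
NaVO3 0.217 0.785 0.911 0.180 0.989
RbVO3 0.250 0.616 1.736 0.290 1.534
KZnF3 3.695 3.785 2.853 3.203 3.295
NdInO3 1.647 1.587 1.653 0.889 1.590
RbCaF3 6.397 6.974 6.482 6.372 6.028
RbPaO3 3.001 2.952 2.864 -0.234 2.937
PmInO3 1.618 1.480 1.896 1.222 1.754
KMnF3 2.656 2.991 2.647 2.428 2.730
NbAgO3 1.334 1.419 1.369 1.227 1.265
CsCdF3 3.286 3.078 2.990 2.724 2.879
KCdF3 3.101 3.125 2.789 2.365 2.990
CsYbF3 7.060 6.641 6.325 6.523 6.736
NaTaO3 2.260 1.714 1.680 2.093 1.715
CsCaF3 6.900 6.874 6.291 6.416 6.379
RbSrCl3 4.626 4.470 4.966 4.647 4.795
AcGaO3 2.896 3.199 2.740 2.869 2.981
BaCeO3 2.299 1.789 3.918 2.696 3.655
"""

MODELS = ["CGCrabNet", "RF", "SVR", "GBR"]


def load_rows(text):
    """Parse rows into (formula, calculated gap, [predictions in MODELS order])."""
    rows = []
    for line in text.strip().splitlines():
        name, *values = line.split()
        rows.append((name, float(values[0]), [float(v) for v in values[1:]]))
    return rows


def mae_by_model(rows):
    """Mean absolute error of each model against the calculated band gaps."""
    return {
        model: sum(abs(preds[j] - ref) for _, ref, preds in rows) / len(rows)
        for j, model in enumerate(MODELS)
    }


rows = load_rows(TABLE5)
mae = mae_by_model(rows)
for model in MODELS:
    print(f"{model:10s} MAE = {mae[model]:.3f} eV")

# Materials whose CGCrabNet prediction is within 0.05 eV of the calculated value
close = [name for name, ref, preds in rows if abs(preds[0] - ref) < 0.05]
print(close)
```

Recomputed this way, the MAE is 0.374 eV for CGCrabNet and 0.678 eV (0.374 + 0.304) for RF, consistent with the abstract, and the 0.05 eV filter returns SrHfO3, RbPaO3, KCdF3 and CsCaF3.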
